3 Information Disorder, Truth, and Trust

“We tend to think that we have rational relationships to information, but we don’t. We have emotional relationships to information, which is why the most effective disinformation draws on our underlying fears and worldviews….We’re less likely to be critical of information that reinforces our worldview or taps into our deep-seated emotional responses” (Wardle, qtd. in Vongkiatkajorn; emphasis added).

Background

In an information environment shaped by algorithms, the attention economy, engagement, and polarization, how do we determine truth? How do we know which sources of information to trust? These questions are becoming increasingly difficult to answer, and even more so as “disinformation that is designed to provoke an emotional reaction can flourish in these spaces” (Wardle).

Indeed, in 2016, Oxford Dictionaries selected “post-truth” as the Word of the Year, defining it as “relating to or denoting circumstances in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief.” To learn more about how this emotional appeal causes unconscious defensiveness when an opinion is challenged (called the backfire effect), check out The Oatmeal’s You’re Not Going to Believe What I’m About to Tell You.

Trust

A 2020 study from Project Information Literacy confirms that the way information is delivered today, with opinion and propaganda mingled with traditional news sources and with algorithms highlighting sources based on engagement rather than quality, has left many college students concerned about the trustworthiness of online content. Students reported that it was difficult to know where to place their trust when credible sources are buried by a deluge of poorer-quality content and misinformation. One student noted that “it’s not that we’re lacking credible information. It’s that we’re drowning in, like, a sea of all these different points out there” (Head et al. 20).

“This is happening at a time when falsehoods proliferate and trust in truth-seeking institutions is being undermined. Even the very existence of truth itself has come into question….People no longer know what to believe or on what grounds we can determine what is true” (Head et al. 11, 36).

Essential Definitions

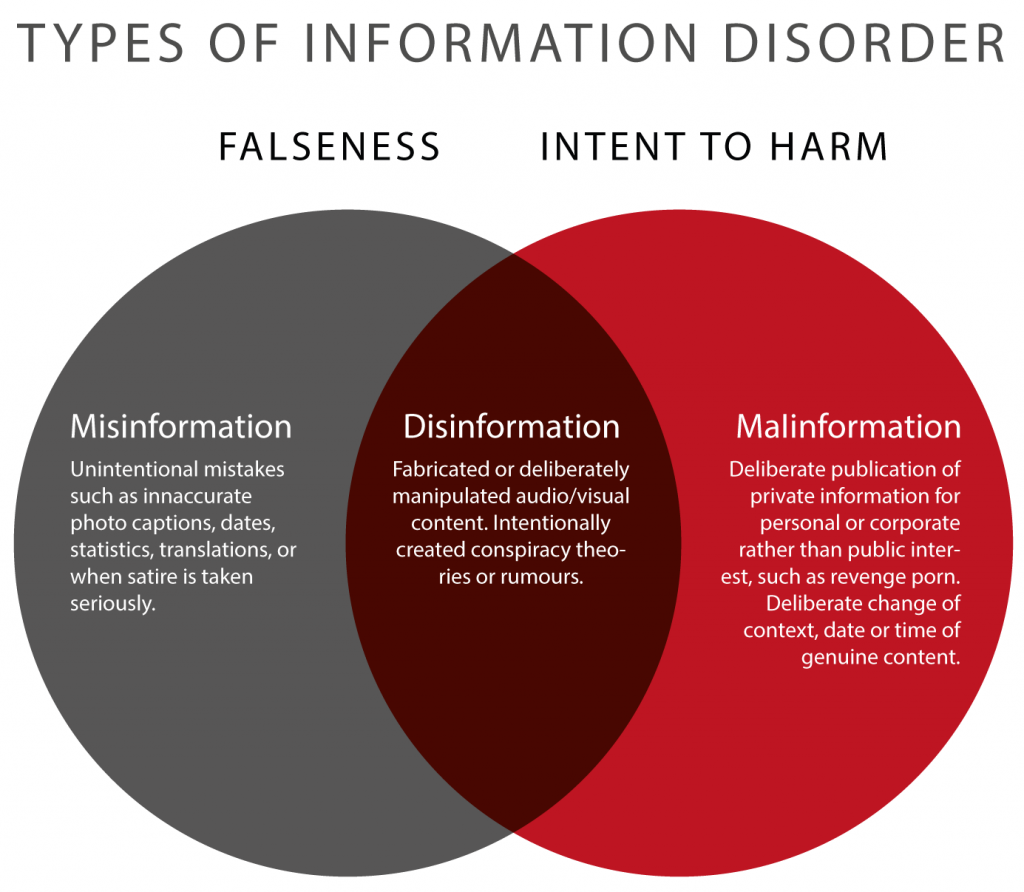

First, it is important to establish a shared vocabulary so that we can better understand and discuss these concepts. Claire Wardle, a world-renowned expert in the areas of social media, user-generated content, and verification, has used “information disorder” as an umbrella term for the various types of false, misleading, manipulated, or deceptive information we have seen flourish in recent years. She also created an essential glossary for information disorder, with definitions for related words and phrases. For example, you will find helpful definitions for terms like algorithm, bots, data mining, deepfakes, doxing, sock puppet, and trolling.

The graphic below illustrates the scale and range of intent behind false information, from unintentionally inaccurate to deliberately deceptive and harmful. For a much more detailed explanation of each form of information disorder, from “satire” to “fabricated content” to “false context,” see First Draft’s Essential Guide to Understanding Information Disorder.


Sources

This section includes material from the source book, Introduction to College Research, as well as the following:

“First Draft’s Essential Guide to Understanding Information Disorder” by Claire Wardle is licensed under CC BY-NC-ND 4.0.

Head, Alison J., Barbara Fister, and Margy MacMillan. “Information Literacy in the Age of Algorithms.” Project Information Literacy, 15 Jan. 2020. Licensed under CC BY-NC-SA 4.0.

Image: “3 Types of Information Disorder” graphic by Claire Wardle & Hossein Derakhshan is licensed under CC BY-NC-ND 3.0.

Image: “Cognition and Emotion” by ElisaRiva on Pixabay.

Image: “Line Mind” by ElisaRiva on Pixabay.

Inman, Matthew. “You’re Not Going to Believe What I’m About to Tell You.” The Oatmeal, 2020.

“Oxford Word of the Year 2016.” Oxford Languages, Oxford University Press.

Vongkiatkajorn, Kanyakrit. “Here’s How You Can Fight Back Against Disinformation.” Mother Jones, 9 Aug. 2018.

Wardle, Claire. “Information Disorder, Part 1: The Essential Glossary.” First Draft, 9 July 2018.

Original material by book author Calantha Tillotson.

Algorithm: "An algorithm is a fixed series of steps that a computer performs in order to solve a problem or complete a task. Social media platforms use algorithms to filter and prioritize content for each individual user based on various indicators, such as their viewing behavior and content preferences. Disinformation that is designed to provoke an emotional reaction can flourish in these spaces when algorithms detect that a user is more likely to engage with or react to similar content." Definition from "Information Disorder: The Essential Glossary" by Claire Wardle.

Bots: "Social media accounts that are operated entirely by computer programs and are designed to generate posts and/or engage with content on a particular platform. In disinformation campaigns, bots can be used to draw attention to misleading narratives, to hijack platforms’ trending lists, and to create the illusion of public discussion and support." Definition from "Information Disorder: The Essential Glossary" by Claire Wardle.

Data mining: "The process of monitoring large volumes of data by combining tools from statistics and artificial intelligence to recognize useful patterns. Through collecting information about an individual’s activity, disinformation agents have a mechanism by which they can target users on the basis of their posts, likes and browsing history. A common fear among researchers is that, as psychological profiles fed by data mining become more sophisticated, users could be targeted based on how susceptible they are to believing certain false narratives." Definition from "Information Disorder: The Essential Glossary" by Claire Wardle.

Deepfakes: "The term currently being used to describe fabricated media produced using artificial intelligence. By synthesizing different elements of existing video or audio files, AI enables relatively easy methods for creating ‘new’ content, in which individuals appear to speak words and perform actions, which are not based on reality." Definition from "Information Disorder: The Essential Glossary" by Claire Wardle.

Doxing: "The act of publishing private or identifying information about an individual online, without his or her permission. This information can include full names, addresses, phone numbers, photos, and more. Doxing is an example of malinformation, which is accurate information shared publicly to cause harm." Definition from "Information Disorder: The Essential Glossary" by Claire Wardle.

Sock puppet: "Online account that uses a false identity designed specifically to deceive. Sock puppets are used on social platforms to inflate another account’s follower numbers and to spread or amplify false information to a mass audience." Definition from "Information Disorder: The Essential Glossary" by Claire Wardle.

Trolling: "The act of deliberately posting offensive or inflammatory content to an online community with the intent of provoking readers or disrupting conversation." Definition from "Information Disorder: The Essential Glossary" by Claire Wardle.