6 z-scores and the Standard Normal Distribution

Learning Outcomes:

In this chapter, you will learn how to:

- Identify uses for z-scores

- Compute and transform z-scores and x-values

- Describe the effects of standardizing a distribution

- Identify the z-score location on a normal distribution

- Discuss the characteristics of a normal distribution

We now understand how to describe and present our data visually and numerically. These simple tools, and the principles behind them, will help you interpret information presented to you and understand the basics of a variable. Moving forward, we now turn our attention to how scores within a distribution are related to one another, how to precisely describe a score’s location within the distribution, and how to compare scores from different distributions.

Normal Distributions

The normal distribution is the most important and most widely used distribution in statistics. It is sometimes called the “bell curve.” It is also called the “Gaussian curve” after the mathematician Carl Friedrich Gauss.

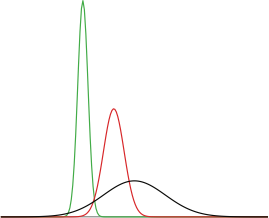

Strictly speaking, it is not correct to talk about the normal distribution since there are many normal distributions. Normal distributions can differ in their means and in their standard deviations. Figure 1 shows three normal distributions. The green (left-most) distribution has a mean of -3 and a standard deviation of 0.5, the distribution in red (the middle distribution) has a mean of 0 and a standard deviation of 1, and the distribution in black (right-most) has a mean of 2 and a standard deviation of 3. These as well as all other normal distributions are symmetric with relatively more values at the center of the distribution and relatively few in the tails. What is consistent about all normal distributions is the shape and the proportion of scores within a given distance along the x-axis. We will focus on the Standard Normal Distribution (also known as the Unit Normal Distribution), which has a mean of 0 and a standard deviation of 1 (i.e., the red distribution in Figure 1).

Figure 1. Normal distributions differing in mean and standard deviation.

Eight features of normal distributions are listed below.

- Normal distributions are symmetric around their mean.

- The mean, median, and mode of a normal distribution are equal.

- The area under the normal curve is equal to 1.0.

- Normal distributions are denser in the center and less dense in the tails.

- Normal distributions represent populations and are defined by two parameters, the mean (μ) and the standard deviation (σ).

- Approximately 68% of the area of a normal distribution is within one standard deviation of the mean.

- Approximately 95% of the area of a normal distribution is within two standard deviations of the mean.

- Approximately 99.7% of the area of a normal distribution is within three standard deviations of the mean.
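The last three features can be checked numerically. The following is a minimal sketch, assuming Python's standard-library `statistics.NormalDist`; the helper name `area_within` is ours, and the three (mean, standard deviation) pairs are the ones described for Figure 1. It illustrates that the proportion of area within one, two, or three standard deviations does not depend on which normal distribution we pick.

```python
from statistics import NormalDist

# The three distributions described for Figure 1: (mean, standard deviation).
distributions = [(-3, 0.5), (0, 1), (2, 3)]

def area_within(dist, k):
    """Proportion of a normal distribution within k standard deviations of its mean."""
    lower = dist.mean - k * dist.stdev
    upper = dist.mean + k * dist.stdev
    return dist.cdf(upper) - dist.cdf(lower)

for mu, sigma in distributions:
    dist = NormalDist(mu, sigma)
    areas = [round(area_within(dist, k), 4) for k in (1, 2, 3)]
    print(f"mean={mu}, sd={sigma}: areas within 1, 2, 3 SD = {areas}")
```

Each line of output should show the same three proportions, roughly 0.68, 0.95, and 0.997, even though the means and standard deviations differ.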

These properties enable us to use the normal distribution to understand how scores relate to one another within and across a distribution. But first, we need to learn how to calculate the standardized scores that make up a standard normal distribution.

z-scores

A z-score is a standardized version of a raw score (x) that gives information about the relative location of that score within its distribution. The formula for converting a raw score into a z-score is found in the box below.

Transforming a Raw Score to a z-score

Values from a Population:

[latex]z=\frac{X - \mu}{\sigma}[/latex]

Values from a Sample:

[latex]z=\frac{X - M}{s}[/latex]

Notice that the only real difference is the notation. The calculations are exactly the same. The numerator is the difference between the score and the mean. The denominator is the standard deviation.

As we learned in previous chapters, the mean tells us the center of a distribution and the standard deviation tells us how spread out the scores are in a distribution. Thus, z-scores will tell us how far the score is away from the center or mean in units of standard deviations (that is, how many standard deviations away) and in what direction (the sign of the z-score).
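To see the two versions of the formula side by side, here is a small sketch in Python. The list of scores is hypothetical, invented only for illustration, and the function name `z_score` is our own. The z-score calculation itself is identical in both cases; what differs is whether the standard deviation passed in was computed as a population value (σ, via `statistics.pstdev`) or a sample value (s, via `statistics.stdev`).

```python
from statistics import mean, pstdev, stdev

def z_score(x, center, spread):
    """Return how many standard deviations x lies from the center."""
    return (x - center) / spread

# Hypothetical scores, invented only to illustrate the formulas.
scores = [2, 4, 4, 4, 5, 5, 7, 9]

# Treating the scores as a whole population: use mu and sigma.
mu, sigma = mean(scores), pstdev(scores)
z_population = z_score(9, mu, sigma)

# Treating the same scores as a sample: use M and s (n - 1 in the denominator).
m, s = mean(scores), stdev(scores)
z_sample = z_score(9, m, s)

print(f"population: mu = {mu}, sigma = {sigma:.2f}, z = {z_population:.2f}")
print(f"sample:     M = {m}, s = {s:.2f}, z = {z_sample:.2f}")
```

For these made-up scores the raw score of 9 sits 2 population standard deviations above the mean, and slightly fewer sample standard deviations above it, because s is a little larger than σ for the same data.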

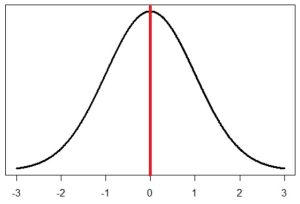

To summarize: the value of a z-score has two parts, the sign (positive or negative) and the magnitude (the actual number). The sign tells you in which half of the distribution the score falls: a positive sign (or no sign) indicates that the score is above the mean, on the right-hand side or upper end of the distribution, and a negative sign indicates that the score is below the mean, on the left-hand side or lower end. The magnitude tells you, in units of standard deviations, how far the score is from the center or mean. A z-score can take on any value between negative and positive infinity, but for reasons we will see soon, z-scores generally fall between -3 and 3. Also, note that if X is the same as the mean, the z-score will equal zero. In other words, a z-score of zero means that X is equal to the mean, or is zero standard deviations away from the mean.

Figure 2. Normal z-distribution with z = 0 represented.

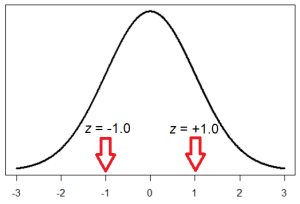

Let’s look at some more examples. A z-score of -1.0 tells us that the score is 1 standard deviation (because of the magnitude 1.0) below (because of the negative sign) the mean. Similarly, a z-score of 1.0 tells us that the score is 1 standard deviation above the mean. Thus, these two scores are the same distance away from the mean but in opposite directions.

Figure 3. Normal z-distribution with z = +1.0 and z = -1.0 represented.

A z-score of -2.5 is two-and-a-half standard deviations below the mean and is therefore farther from the center than both of the previous scores, and a z-score of 0.25 is closer than all of the ones before. In later chapters, we will learn to formalize the distinction between what we consider “close” to the center or “far” from the center. For now, we will use a rough cut-off of 1.5 standard deviations in either direction as the difference between close scores (those within 1.5 standard deviations or between z = -1.5 and z = 1.5) and extreme scores (those farther than 1.5 standard deviations – below z = -1.5 or above z = 1.5).
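The reading of sign and magnitude described above can be sketched as a small Python function. The name `describe_z` and its `cutoff` parameter are our own choices for illustration; the default of 1.5 is the rough cut-off used in this chapter, not a formal rule.

```python
def describe_z(z, cutoff=1.5):
    """Summarize a z-score's direction, distance, and rough closeness to the mean."""
    if z > 0:
        direction = "above the mean"
    elif z < 0:
        direction = "below the mean"
    else:
        direction = "at the mean"
    distance = abs(z)  # magnitude, in standard deviation units
    # Scores beyond the cutoff in either direction count as extreme.
    label = "extreme" if distance > cutoff else "close"
    return direction, distance, label

for z in (0.0, -1.0, 1.0, -2.5, 0.25):
    direction, distance, label = describe_z(z)
    print(f"z = {z:+.2f}: {distance:.2f} SD {direction} ({label})")
```

Of the five z-scores discussed so far, only -2.5 lands outside the rough cut-off and is labeled extreme.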

z-scores and Relative Location

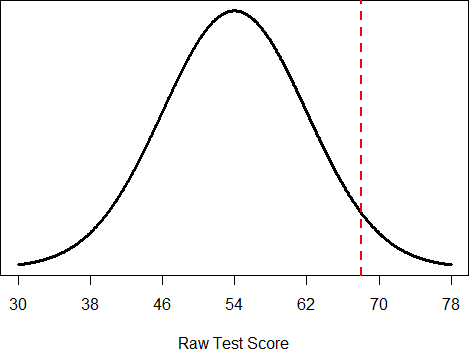

We can use z-scores to standardize a distribution. We can also convert raw scores into z-scores to get a better idea of where in the distribution those scores fall, in other words, to provide a relative location. Let’s say we get a score of 68 on an exam. We may be disappointed to have scored so low, but perhaps it was just a very hard exam. Having information about the distribution of all scores in the class would be helpful to give us some perspective on our score. We find out that the class got an average score of 54 with a standard deviation of 8. To find out our relative location within this distribution, we simply convert our test score into a z-score.

[latex]z=\frac{X - \mu}{\sigma}=\frac{68 - 54}{8}=1.75[/latex]

We find that we are 1.75 standard deviations above the average, beyond our rough cut-off of 1.5 that separates close scores from extreme ones. Suddenly our 68 is looking pretty good!

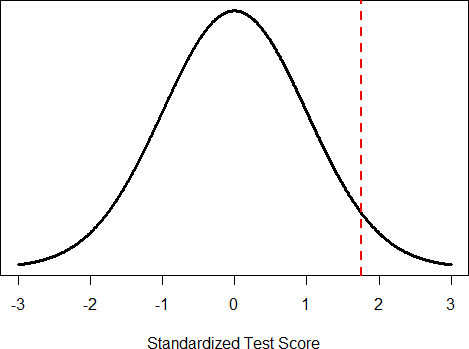

Figure 4. Raw and standardized versions of a single score

Figure 4 shows both the raw score and the z-score on their respective distributions. Notice that the red line indicating where each score lies is in the same relative spot for both. This is because transforming a raw score into a z-score does not change its relative location; it only makes it easier to know precisely where it is.

Z-scores are also useful for comparing scores from different distributions. Let’s say we take the SAT and score 501 on both the math and critical reading sections. Does that mean we did equally well on both? Scores on the math portion are distributed normally with a mean of 511 and standard deviation of 120, so our z-score on the math section is:

[latex]z_{\text{math}} = \frac{501 - 511}{120} = -0.08[/latex]

which is just slightly below average (note the use of “math” as a subscript; subscripts are used when presenting multiple versions of the same statistic in order to know which one is which and have no bearing on the actual calculation). The critical reading section has a mean of 495 and standard deviation of 116, so:

[latex]z_{\text{CR}} = \frac{501 - 495}{116} = 0.05[/latex]

So, even though we were almost exactly average on both tests, we did a little bit better on the critical reading portion relative to other people.
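The same comparison can be written as a short Python sketch. The means and standard deviations are the ones given above; the dictionary layout and the names `sections` and `z_by_section` are our own.

```python
def z_score(x, mean, sd):
    """Standardize a raw score against a distribution's mean and SD."""
    return (x - mean) / sd

# Section means and standard deviations as given in the text.
sections = {
    "math": {"mean": 511, "sd": 120},
    "critical reading": {"mean": 495, "sd": 116},
}
raw_score = 501  # the same raw score on both sections

z_by_section = {
    name: z_score(raw_score, params["mean"], params["sd"])
    for name, params in sections.items()
}

for name, z in z_by_section.items():
    print(f"{name}: z = {z:.2f}")

# The larger z-score marks the relatively stronger performance.
best = max(z_by_section, key=z_by_section.get)
print(f"relatively stronger section: {best}")
```

Because both scores are now in standard deviation units, they can be compared directly even though the two sections have different means and spreads.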

Finally, z-scores are incredibly useful if we need to combine information from different measures that are on different value ranges. Let’s say we give a set of employees a series of tests on things like job knowledge, personality, and leadership. We may want to combine these into a single score we can use to rate employees for development or promotion, but look what happens when we take the mean of raw scores from different value ranges, as shown in Table 1:

| Raw Scores | Job Knowledge (0 – 100) | Personality (1 – 5) | Leadership (1 – 5) | Mean |
| --- | --- | --- | --- | --- |
| Employee 1 | 98 | 4.2 | 1.1 | 34.43 |
| Employee 2 | 96 | 3.1 | 4.5 | 34.53 |
| Employee 3 | 97 | 2.9 | 3.6 | 34.50 |

Table 1. Raw test scores (possible value ranges in parentheses).

Because the job knowledge scores were so much larger than the other scores, and so similar to one another, they overpowered the other scores and removed almost all variability between the means. This is why you should not average raw scores from tests measured on different possible value ranges.

However, if we standardize these scores into z-scores, we can more accurately assess differences between employees, as shown in Table 2. And, we can take a meaningful average (or mean) of the z-scores across the three different employee measures.

| z-scores | Job Knowledge | Personality | Leadership | Mean |
| --- | --- | --- | --- | --- |
| Employee 1 | 1.00 | 1.14 | -1.12 | 0.34 |
| Employee 2 | -1.00 | -0.43 | 0.81 | -0.20 |
| Employee 3 | 0.00 | -0.71 | 0.30 | -0.14 |

Table 2. Standardized scores.
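For readers who want to trace how Table 2 follows from Table 1, here is a minimal sketch in Python. It assumes each measure is standardized against the mean and the sample standard deviation (n − 1 in the denominator) of the three employees' scores on that measure; the text does not state this, but that choice matches the tabled values to two decimals. The function and variable names are ours.

```python
from statistics import mean, stdev

# Raw scores from Table 1, one list per measure, in employee order.
raw = {
    "Job Knowledge": [98, 96, 97],
    "Personality": [4.2, 3.1, 2.9],
    "Leadership": [1.1, 4.5, 3.6],
}
employees = ["Employee 1", "Employee 2", "Employee 3"]

def standardize(values):
    """Convert raw scores to z-scores using the sample SD (an assumption)."""
    m, s = mean(values), stdev(values)
    return [(x - m) / s for x in values]

z = {measure: standardize(values) for measure, values in raw.items()}

# Average each employee's z-scores across the three measures.
z_means = {}
for i, name in enumerate(employees):
    row = [z[measure][i] for measure in raw]
    z_means[name] = mean(row)
    cells = "  ".join(f"{value:6.2f}" for value in row)
    print(f"{name}: {cells}   mean = {z_means[name]:.2f}")
```

Once every measure is expressed in standard deviation units, no single test dominates the average, and the differences between employees become visible.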

Creating a new distribution

Another convenient characteristic of z-scores is that they can be transformed into any normal distribution that we would like. This can be very useful if we don’t want to work with negative numbers or if we have a specific distribution we would like to work with.

Transforming a z-score to a Raw Score

Values from a Population:

[latex]X = z\sigma + \mu[/latex]

Values from a Sample:

[latex]X = zs + M[/latex]

Notice that these are just simple rearrangements of the original formulas for calculating z-scores from raw scores.

Let’s say we create a new measure of intelligence, and initial calibration finds that our scores have a mean of 40 and standard deviation of 7. Three people who have scores of 52, 43, and 34 want to know what this means in terms of their level of intelligence (i.e., IQ score). We first need to convert their raw scores on our test into z-scores:

[latex]z=\frac{52 - 40}{7}=1.71[/latex]

[latex]z=\frac{43 - 40}{7}=0.43[/latex]

[latex]z=\frac{34 - 40}{7}=-0.86[/latex]

While these z-scores tell them how they did on our measure, they do not show how they performed relative to the well-known, standard measure of IQ. So, we can translate these z-scores into the more familiar metric of IQ scores, which have a mean of 100 and standard deviation of 15:

[latex]IQ = 1.71 \times 15 + 100 = 125.65[/latex]

[latex]IQ = 0.43 \times 15 + 100 = 106.45[/latex]

[latex]IQ = -0.86 \times 15 + 100 = 87.10[/latex]

We would also likely round these values to 126, 106, and 87, respectively, for convenience. This sort of transformation allows us to take scores and make them more meaningful for different audiences or to make them comparable to other distributions.
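The two-step conversion (raw score to z-score, then z-score to the new scale) can be sketched in a few lines of Python. The function name `rescale` and its parameter names are our own. Note that the sketch carries the z-score at full precision rather than rounding it to two decimals first, so its unrounded results can differ by a few tenths from a hand calculation.

```python
def rescale(x, old_mean, old_sd, new_mean, new_sd):
    """Move a raw score from one scale to another by way of its z-score."""
    z = (x - old_mean) / old_sd       # raw score -> z-score
    return z * new_sd + new_mean      # z-score -> score on the new scale

# Our hypothetical intelligence measure: mean 40, SD 7. IQ scale: mean 100, SD 15.
for raw in (52, 43, 34):
    iq = rescale(raw, old_mean=40, old_sd=7, new_mean=100, new_sd=15)
    print(f"raw {raw} -> IQ {iq:.2f} (rounded: {round(iq)})")
```

The same function works for any pair of scales, which is what makes z-scores a convenient intermediate step.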

Z-scores and the Area under the Curve

Z-scores and the standard normal distribution go hand-in-hand. A z-score will tell you exactly where in the standard normal distribution a value is located, and any normal distribution can be converted into a standard normal distribution (AKA z-score distribution) by converting all of the scores in the distribution into z-scores, a process known as standardization.
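To make the effect of standardization concrete, here is a small Python sketch. The raw scores are hypothetical, invented for illustration, and are treated as a complete population. It checks three things: the z-scores have a mean of 0, they have a standard deviation of 1, and every score keeps its position relative to the others.

```python
import math
from statistics import mean, pstdev

# Hypothetical raw scores, invented for illustration.
raw_scores = [41, 47, 52, 54, 54, 58, 63, 68]

mu, sigma = mean(raw_scores), pstdev(raw_scores)
z_scores = [(x - mu) / sigma for x in raw_scores]

# After standardizing, the distribution is centered at 0 with a spread of 1.
mean_is_zero = math.isclose(mean(z_scores), 0.0, abs_tol=1e-9)
sd_is_one = math.isclose(pstdev(z_scores), 1.0, abs_tol=1e-9)

# Standardizing relabels the axis; it does not reorder the scores.
n = len(raw_scores)
order_raw = sorted(range(n), key=lambda i: raw_scores[i])
order_z = sorted(range(n), key=lambda i: z_scores[i])

print("mean of z-scores is 0:", mean_is_zero)
print("SD of z-scores is 1:  ", sd_is_one)
print("rank order preserved: ", order_raw == order_z)
```

Standardizing changes the numbers on the x-axis, not the shape of the distribution or the order of the scores within it.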

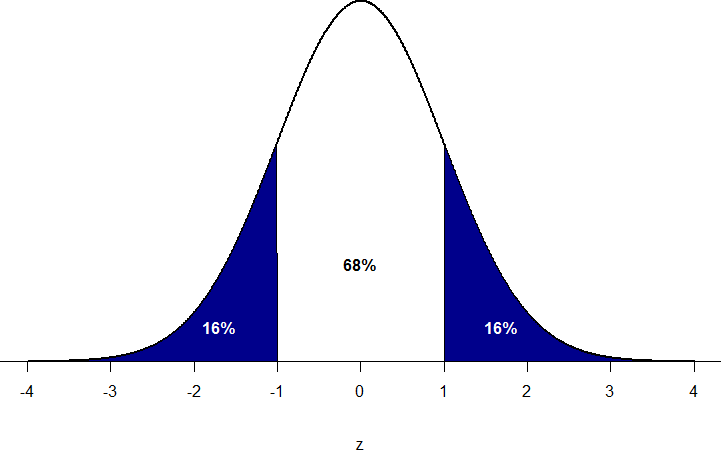

We saw in the previous chapter that standard deviations can be used to divide the normal distribution: about 68% of the distribution falls within 1 standard deviation of the mean, about 95% within 2 standard deviations, and about 99.7% within 3 standard deviations. Because z-scores are in units of standard deviations, this means that about 68% of scores fall between z = -1.0 and z = 1.0, and so on. We call this 68% (or any percentage we have based on our z-scores) the proportion of the area under the curve. Any area under the curve is bounded by (defined by, delineated by, etc.) a single z-score or pair of z-scores.

An important property to point out here is that, because the total area under the curve of a distribution is always equal to 1.0, these areas under the curve can be added together or subtracted from 1 to find the proportion in other areas. For example, we know that the area between z = -1.0 and z = 1.0 (i.e., within one standard deviation of the mean) contains about 68.26% of the area under the curve, which can be represented in decimal form as 0.6826 (to change a percentage to a decimal, simply move the decimal point 2 places to the left). Because the total area under the curve is equal to 1.0, the proportion of the area outside z = -1.0 and z = 1.0 is equal to 1.0 – 0.6826 = 0.3174, or about 32% (see Figure 5 below). This area makes up the tails of the distribution. Because this area is split between two tails and because the normal distribution is symmetrical, each tail contains exactly one-half of it, or about 16% of the total area under the curve.
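The subtraction described above can be reproduced with Python's standard-library `statistics.NormalDist`; this is a sketch, and the helper name `area_between` is our own. Computed directly, the middle area comes out at about 0.6827 rather than 0.6826. The small gap most likely reflects rounding in printed tables and does not change the conclusions.

```python
from statistics import NormalDist

standard_normal = NormalDist(mu=0, sigma=1)

def area_between(z_low, z_high):
    """Proportion of the standard normal curve between two z-scores."""
    return standard_normal.cdf(z_high) - standard_normal.cdf(z_low)

middle = area_between(-1.0, 1.0)   # within one SD of the mean
both_tails = 1.0 - middle          # everything outside z = -1 and z = 1
one_tail = both_tails / 2          # symmetry splits the remainder evenly

print(f"between z = -1 and z = 1: {middle:.4f}")
print(f"both tails combined:      {both_tails:.4f}")
print(f"each tail:                {one_tail:.4f}")
```

The same pattern of adding areas or subtracting them from 1 works for any pair of z-scores.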

Figure 5. Shaded areas represent the area under the curve in the tails

We will have much more to say about this concept in the coming chapters. As it turns out, this is a quite powerful idea that enables us to make statements about how likely an outcome is and what that means for research questions we would like to answer and hypotheses we would like to test. But first, we need to make a brief foray into some ideas about probability in our next chapters.

z-score: A standardized version of a raw score (x) that gives the relative location of that score within its distribution in terms of the mean and standard deviation of the distribution.