9 Current and Emerging Trends in AI/ML

Learning Objectives

- Analyze and explain the concept of generative AI, including its potential benefits and ethical considerations.

- Evaluate the challenges related to algorithmic accountability in AI decisions and actions.

- Understand the potential of quantum machine learning and its current development challenges.

- Apply knowledge of federated learning to discuss its potential benefits and challenges in a real-world context.

- Describe the role of AI agents, multimodal models, and retrieval-augmented generation in advancing enterprise and consumer applications.

- Evaluate the impact of regulatory and open-source developments on responsible AI deployment.

Current and Emerging Trends in AI Development

The future of AI/ML is marked by exciting and transformative trends and technologies that continue to evolve. Here are some of the emerging developments in the field:

Advances in Generative AI

Generative AI has rapidly evolved beyond text generation. It now creates high-quality images, video, audio, and code, driven by the latest models from OpenAI, Google, Anthropic, and others, including video-generation platforms such as OpenAI’s Sora and Pika Labs. These systems can produce human-like content across multiple formats, opening up transformative possibilities in business, media, education, and entertainment.

In content creation, generative AI assists writers, marketers, and educators by drafting text, refining tone, suggesting structure, and even co-authoring complex narratives. Journalists use these tools to summarize interviews or generate data-driven articles, while students and instructors leverage AI to personalize learning content and generate study aids. Visual content generation has also matured significantly—AI tools can now produce photorealistic images, storyboards, and promotional materials from a simple text prompt.

Creative industries such as advertising, film, and gaming are harnessing generative AI for concept design, script generation, virtual avatars, and interactive environments. Filmmakers use AI to generate rough cuts, simulate lighting effects, or create synthetic characters. In gaming and simulation, AI generates immersive environments, non-player character (NPC) dialogue, and procedurally generated assets, accelerating development and enabling greater interactivity.

Natural Language Processing (NLP) has also advanced well beyond traditional text-based tasks, evolving into a more dynamic, multimodal, and context-aware capability. Modern NLP systems can interpret not only written language but also spoken input, emotional tone, visual cues, and user intent, enabling a deeper understanding of human communication. This has led to major improvements in sentiment analysis, where models can detect sarcasm, mood shifts, and subtle emotional nuance, a capability sometimes informally called vibe detection. Virtual assistants and chatbots now operate across multiple modalities and maintain context over long, multi-turn conversations, including interactions that span sessions and devices. For example, an AI assistant might recognize a customer’s frustrated tone from audio input, reference their previous text chats, and tailor its response accordingly. These advances are making conversational AI more natural, adaptive, and empathetic, with applications in customer service, mental health support, education, and workplace collaboration.

Generative AI also plays a growing role in virtual and augmented reality (VR/AR), where it dynamically generates environments and interactions, reducing the need for hand-crafted assets. This is expanding applications in areas like architectural visualization, medical training simulations, and immersive marketing experiences.

Despite its power, generative AI presents serious ethical and regulatory challenges. It raises complex issues related to intellectual property, deepfakes, disinformation, bias, and authenticity. The ability to generate lifelike but synthetic media has already led to misuse in political propaganda, scams, and misinformation campaigns. As a result, regulators and platform providers are increasingly implementing safeguards such as watermarking, provenance metadata, and content moderation policies to mitigate harm.

In summary, generative AI is reshaping the creative and professional landscape by dramatically lowering the cost and time required to produce high-quality content. However, realizing its full potential requires robust frameworks for ethical use, transparency, and accountability in how AI-generated content is produced, attributed, and consumed.

Explainable AI (XAI)

As artificial intelligence systems become more advanced and deeply embedded in critical business and public-sector decisions, the demand for transparency and interpretability has intensified. Explainable AI (XAI) refers to a suite of tools and methodologies designed to make the outputs of machine learning models understandable to humans—particularly to stakeholders who may not be AI experts. This is essential for building trust, ensuring regulatory compliance, and facilitating meaningful human oversight.

Since 2024, the field of XAI has moved beyond technical visualization tools to include context-sensitive, role-based explanations. In practice, this means that explanations are now tailored to specific users (e.g., a patient, a compliance officer, or a product manager) rather than delivered in one-size-fits-all formats. Moreover, explainability is increasingly integrated into the user interfaces of AI-powered systems—such as AI copilots in enterprise platforms—allowing users to query why a recommendation was made and how specific inputs influenced the outcome.

Real-world applications continue to expand. In healthcare, explainable AI helps clinicians assess the rationale behind diagnostic models or treatment suggestions, supporting more informed and accountable medical decisions. In finance, XAI is embedded into credit scoring systems and fraud detection dashboards to meet regulatory standards (such as the EU AI Act or U.S. AI accountability frameworks). In HR, XAI helps mitigate bias in hiring algorithms by surfacing the factors behind candidate evaluations.

New methods such as counterfactual explanations, causal modeling, and interactive dashboards are increasingly replacing static feature-importance charts. These innovations allow users to explore “what-if” scenarios, test assumptions, and better understand the system’s boundaries and sensitivities.
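
To make the “what-if” idea concrete, here is a minimal sketch of a counterfactual explanation. It assumes a hypothetical linear credit-scoring model whose weights, threshold, and feature names are invented for illustration. For a rejected applicant, it asks one feature at a time: what value would have moved the score onto the approval threshold?

```python
# Hypothetical linear credit model: score = bias + sum(weight * feature).
# Weights, bias, threshold, and feature names are invented for illustration.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.15}
BIAS = -1.0
THRESHOLD = 0.0  # a score at or above the threshold means "approve"


def score(applicant: dict[str, float]) -> float:
    """Linear credit score for one applicant."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def single_feature_counterfactuals(applicant: dict[str, float]) -> dict[str, float]:
    """For each feature, the value that would put the score exactly on the
    threshold if that feature alone changed and all others stayed fixed."""
    gap = THRESHOLD - score(applicant)
    return {
        name: applicant[name] + gap / weight
        for name, weight in WEIGHTS.items()
        if weight != 0
    }


applicant = {"income_k": 40.0, "debt_ratio": 0.5, "years_employed": 2.0}
print(round(score(applicant), 2))  # -0.6, so the applicant is rejected
for name, value in single_feature_counterfactuals(applicant).items():
    print(f"approve if {name} were {value:.2f}")
```

Each result reads as an explanation, for example "an income of 55k instead of 40k" or "a debt ratio of 0.30 instead of 0.50". Real counterfactual tools also restrict the search to plausible, actionable changes and handle nonlinear models. This sketch does neither.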

Ultimately, XAI is a cornerstone of responsible AI. By enabling domain experts, regulators, and everyday users to interrogate AI decisions, it fosters transparency, improves outcomes, and ensures that AI systems remain aligned with human values and institutional goals.

AI REASONING MODELS

As artificial intelligence matures, a growing emphasis is being placed on not just what models can output, but how they think. Traditional machine learning systems excel at recognizing patterns in large datasets, but they often struggle with multi-step reasoning, contextual judgment, or applying knowledge to novel scenarios. To address these limitations, reasoning models are being developed to mimic more structured, interpretable, and goal-directed forms of cognition.

In the context of AI, reasoning refers to the process by which a system applies logic, inference, and structured steps to reach a conclusion or solve a problem. Unlike basic predictive models that provide outputs based on statistical correlations, reasoning models attempt to emulate aspects of human problem-solving, such as:

- Deductive reasoning: deriving specific conclusions from general rules

- Inductive reasoning: generalizing from specific examples

- Abductive reasoning: inferring the most likely explanation

- Causal reasoning: understanding relationships between causes and effects

Advancements in AI Reasoning Techniques

Since 2023, significant progress has been made in integrating reasoning capabilities into large language models (LLMs) and AI systems. Notable techniques include:

- Chain-of-Thought Prompting: A method that guides language models to articulate intermediate reasoning steps before producing a final answer. This approach significantly improves performance on tasks requiring arithmetic, logic, or structured decision-making.

- Tool-Augmented Reasoning: AI models are increasingly paired with external tools, such as calculators, databases, or search engines, to support factual accuracy and procedural logic. For example, an LLM solving a math problem may invoke a Python interpreter to verify a calculation.

- Retrieval-Augmented Reasoning: Combining large language models with knowledge retrieval systems enables real-time access to relevant facts, documents, or prior cases. This reduces hallucinations and supports grounded decision-making in dynamic contexts.

- Neuro-symbolic Models: These hybrid systems blend neural networks (which learn patterns) with symbolic logic systems (which apply explicit rules and structures), offering both generalization and formal reasoning capabilities.
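
Tool-augmented reasoning can be sketched as a loop in which the model either requests a tool or gives a final answer. In the minimal sketch below, `fake_model` is a hypothetical stand-in for an LLM. The `CALL`/`RESULT`/`ANSWER` text protocol is also an assumption made for illustration; real systems use structured function-calling interfaces. The calculator evaluates arithmetic through Python's `ast` module rather than `eval`, so it cannot run arbitrary code.

```python
import ast
import operator

# Safe calculator tool: supports numbers, + - * /, unary minus, and parentheses.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
        ast.Div: operator.truediv, ast.USub: operator.neg}


def calculator(expression: str) -> float:
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")
    return ev(ast.parse(expression, mode="eval"))


TOOLS = {"calculator": calculator}


def fake_model(transcript: list[str]) -> str:
    """Hypothetical stand-in for an LLM: requests the tool once, then answers
    with the tool's result. Uses an assumed CALL/RESULT/ANSWER text protocol."""
    results = [line for line in transcript if line.startswith("RESULT ")]
    if not results:
        return "CALL calculator 1234 * 5678"
    return "ANSWER " + results[-1].removeprefix("RESULT ")


def run(question: str, max_steps: int = 5) -> str:
    transcript = [f"QUESTION {question}"]
    for _ in range(max_steps):
        reply = fake_model(transcript)
        if reply.startswith("ANSWER "):
            return reply.removeprefix("ANSWER ")
        _, tool_name, argument = reply.split(" ", 2)  # "CALL <tool> <argument>"
        transcript.append(f"RESULT {TOOLS[tool_name](argument)}")
    raise RuntimeError("no answer within the step limit")


print(run("What is 1234 * 5678?"))  # 7006652
```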

These advances are making it possible for AI systems to assist with tasks that previously required domain experts, such as supporting medical diagnosis, conducting legal research, or drafting strategic business plans.

Why Reasoning Matters

Robust reasoning capabilities enable AI to operate more reliably in real-world settings—especially in high-stakes domains where transparency, correctness, and justification are critical. Reasoning models can provide:

- Traceable logic behind a decision or recommendation (a key benefit for explainable AI)

- Improved generalization across contexts or unfamiliar scenarios

- Higher trust from end-users and regulators who seek to understand not just what AI predicts, but why

Furthermore, reasoning is foundational for AI agents that must break down goals, execute sequential tasks, and adapt based on changing information—a direct link to the next stage in AI system design.

Case Study: Using a Reasoning Model to Develop a Market Entry Strategy

Scenario: A mid-sized U.S. software company, DataNova, is considering expansion into the Southeast Asian market with its AI-based customer relationship management (CRM) platform. The executive team wants to develop a data-informed market entry strategy, considering factors like regional demand, competitive landscape, regulatory risks, and pricing strategy.

Challenge: The decision requires synthesizing diverse information—market data, local business practices, competitive positioning, legal constraints, and logistical feasibility—and then reasoning through multiple strategic options under uncertainty.

AI Application: Reasoning Model-Driven Strategic Planning

To support the process, DataNova deploys a chain-of-thought–enabled reasoning model integrated with retrieval tools and data visualization APIs. The system operates in several stages:

- Initial Query and Decomposition: Executives prompt the system with the question

  “What are the top three market entry strategies for our CRM platform in Southeast Asia, given our mid-market focus and privacy-sensitive features?”

  The model then decomposes the problem into subcomponents:

  - Market segmentation

  - Competitive mapping

  - Regulatory barriers

  - Go-to-market channel analysis

  - Pricing adaptation

- Data Retrieval and Evaluation: Using retrieval-augmented generation (RAG), the model pulls current market reports, competitor offerings, and regional policy summaries from trusted databases and public filings (e.g., ASEAN publications, Gartner reports, local chambers of commerce).

- Reasoning and Synthesis: The model applies structured reasoning to the entry options:

  - Weighs direct entry vs. joint venture vs. local distributor

  - Analyzes tradeoffs in speed, risk, margin, and brand control

  - Uses causal logic to anticipate the regulatory consequences of business model choices (e.g., SaaS vs. on-premise deployment)

- Output and Recommendations: The system generates a strategic memo outlining:

  - A primary recommendation: partner with a Singapore-based SaaS reseller for initial entry, due to infrastructure maturity and alignment with GDPR-like data privacy norms.

  - Supporting rationale for each step, including a SWOT-style matrix comparing entry options.

  - Suggested KPIs for the first 12 months of implementation, based on benchmarks from regional case studies.
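
The tradeoff analysis in the Reasoning and Synthesis stage can be illustrated with a simple weighted decision matrix. In the sketch below, the criteria weights and the 1-to-5 scores are hypothetical values chosen for illustration, not figures from the case. A reasoning model would typically justify each score from retrieved evidence.

```python
# Hypothetical criteria weights (summing to 1.0) and 1-5 scores, invented for
# illustration. Higher is better on every criterion, so risk is scored as "low_risk".
WEIGHTS = {"speed": 0.3, "low_risk": 0.3, "margin": 0.2, "brand_control": 0.2}

OPTIONS = {
    "direct entry": {"speed": 2, "low_risk": 2, "margin": 5, "brand_control": 5},
    "joint venture": {"speed": 3, "low_risk": 3, "margin": 3, "brand_control": 3},
    "local distributor": {"speed": 5, "low_risk": 4, "margin": 2, "brand_control": 2},
}


def weighted_score(scores: dict[str, int]) -> float:
    """Weighted sum of an option's criterion scores."""
    return sum(WEIGHTS[criterion] * scores[criterion] for criterion in WEIGHTS)


def rank_options(options: dict[str, dict[str, int]]) -> list[str]:
    """Option names ordered from highest to lowest weighted score."""
    return sorted(options, key=lambda name: weighted_score(options[name]), reverse=True)


for name in rank_options(OPTIONS):
    print(f"{name}: {weighted_score(OPTIONS[name]):.2f}")
# local distributor: 3.50
# direct entry: 3.20
# joint venture: 3.00
```

Changing `WEIGHTS` is one way to encode the executive team’s risk appetite. For example, raising the weight on `brand_control` favors direct entry. The ranking is only as sound as the scores and weights behind it.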

Outcome: The executive team uses the model’s structured analysis as a starting point for internal strategy discussions. They adapt the recommendations based on internal risk appetite and allocate resources accordingly. The reasoning model is later used to simulate additional scenarios (e.g., regional expansion to Vietnam or Indonesia in Phase II), helping create a phased, evidence-backed growth plan.

Takeaway: This case illustrates how reasoning models can support high-level strategic decision-making by breaking down complex problems, integrating structured logic with live data retrieval, and presenting outputs in a format suitable for executive review. Unlike static reports or black-box analytics, these systems can expose their intermediate steps, adapt to new information, and tailor recommendations to context. This makes them a valuable complement to human strategic insight.

Challenges and Research Frontiers

Despite recent progress, reasoning in AI is still a developing field. Language models can simulate reasoning but may lack true understanding or consistency across steps. Complex reasoning tasks often require integrating multiple types of knowledge, managing long-term memory, and coordinating between models and tools—all of which remain open research challenges.

Efforts are also underway to benchmark AI reasoning using datasets like BIG-Bench, MATH, and ARC-Challenge, and to explore how reasoning models can interact with humans in collaborative problem-solving environments.

Conclusion

Reasoning models represent a critical step toward more capable, interpretable, and human-aligned AI systems. As AI moves beyond pattern recognition and toward autonomous task execution, the ability to reason—logically, causally, and adaptively—will define the next generation of intelligent tools and agents. These models not only enhance accuracy and transparency but also lay the groundwork for AI systems that can truly collaborate with humans in decision-making and complex problem-solving.

AI AGENTS AND AUTONOMOUS WORKFLOWS

As artificial intelligence systems continue to evolve, a transformative new capability has emerged: AI agents—software entities capable of autonomously carrying out complex, multi-step tasks by interacting with their environment, executing decisions, and adapting to feedback. Unlike traditional AI tools that respond to single inputs with isolated outputs (e.g., answering a question or generating a paragraph), AI agents operate more like digital workers. They combine language model reasoning with tools such as web browsers, APIs, file systems, databases, or even development environments to complete real-world objectives with minimal human oversight.

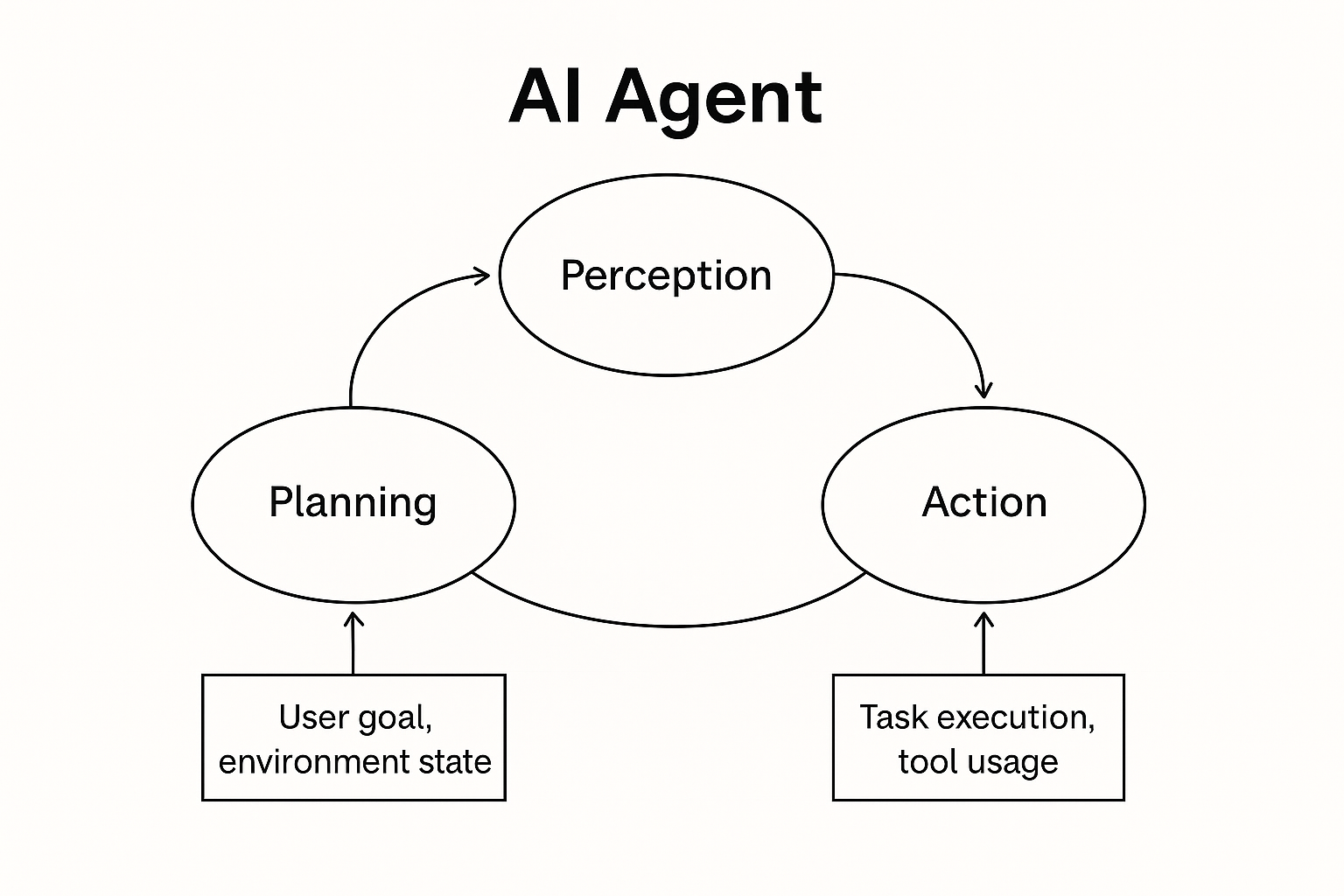

At its core, an AI agent is a system that follows a loop of perception, planning, and action:

- Perception involves understanding the user’s goal and the current state of the environment.

- Planning refers to generating a step-by-step sequence of tasks or subgoals to accomplish the objective.

- Action includes executing each step, such as querying a database, writing code, or sending an email, often by invoking external tools or APIs.

Many modern AI agents are powered by large language models (LLMs), which serve as the reasoning engine behind the agent’s ability to plan and adapt. These agents are often designed with memory, allowing them to track progress across tasks, recall user preferences, and refine strategies over time.
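
The perceive-plan-act loop with memory can be sketched in a few lines. In this minimal sketch, `fixed_planner` is a hypothetical stand-in for an LLM planner and the three tools are stubs. What matters is the control flow: the agent re-plans after every action and uses its memory to skip steps it has already completed.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Minimal perceive-plan-act loop with a memory of completed steps."""

    planner: Callable[[str, list[str]], list[str]]  # (goal, memory) -> remaining steps
    tools: dict[str, Callable[[str], str]]  # tool name -> function taking one argument
    memory: list[str] = field(default_factory=list)

    def run(self, goal: str, max_iterations: int = 10) -> list[str]:
        for _ in range(max_iterations):
            # Perceive + plan: compare the goal with what memory says is already done.
            steps = self.planner(goal, self.memory)
            if not steps:
                return self.memory  # goal complete
            # Act: execute only the next step, record it, then re-plan.
            tool_name, _, argument = steps[0].partition(":")
            result = self.tools[tool_name](argument)
            self.memory.append(f"{steps[0]} -> {result}")
        raise RuntimeError("goal not completed within the iteration limit")


def fixed_planner(goal: str, memory: list[str]) -> list[str]:
    """Hypothetical stand-in for an LLM planner: a fixed plan minus completed steps."""
    plan = [f"search:{goal}", "summarize:search results", "email:manager"]
    done = {entry.split(" -> ")[0] for entry in memory}
    return [step for step in plan if step not in done]


# Stub tools; a real agent would call a search API, an LLM, and an email service.
TOOLS = {
    "search": lambda query: f"3 reports found for '{query}'",
    "summarize": lambda text: f"summary of {text}",
    "email": lambda recipient: f"sent to {recipient}",
}

agent = Agent(planner=fixed_planner, tools=TOOLS)
for entry in agent.run("EV battery market"):
    print(entry)
```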

Prominent examples include Auto-GPT, an open-source project that chains LLM prompts together to autonomously pursue user-defined goals; Devin, from Cognition, presented as an AI software engineer that writes, debugs, and tests code with limited human input; and AgentGPT, which offers customizable agents for productivity, research, and web-based automation.

Autonomous Workflows in Business

In enterprise settings, AI agents are rapidly being deployed to streamline workflows that were previously time-intensive or prone to error. For example:

- Market research agents can autonomously scan and summarize recent industry reports, extract competitive intelligence, and generate strategy briefs.

- Customer service agents can triage support tickets, access databases, and draft tailored responses.

- Executive assistants can schedule meetings, book travel, generate agendas, and manage email threads with persistent memory across interactions.

- Software development agents can write boilerplate code, refactor legacy systems, generate documentation, and validate software functionality end-to-end.

The key advantage of these agents lies in their ability to interface with various systems—such as CRM platforms, project management tools, or internal knowledge bases—and autonomously coordinate between them, often completing tasks that would otherwise require multiple human collaborators.

Multi-Agent Systems

Beyond standalone agents, multi-agent systems (MAS) represent the next frontier. These involve multiple AI agents interacting with each other—cooperatively, competitively, or hierarchically—to solve complex problems. In a MAS environment, one agent may specialize in data retrieval, another in analysis, and a third in presentation or communication, working together to complete tasks with greater efficiency and modularity.

These systems are inspired by human organizational behavior and distributed problem-solving. For instance, in a financial services firm, one agent might gather market data, another could analyze trends, and a third might prepare an executive briefing. By dividing labor and coordinating efforts, MAS can handle high-complexity tasks with scalability and resilience.

Researchers are also exploring negotiation, task allocation, and self-governing coordination among AI agents—paving the way for adaptive, collaborative systems that operate with minimal human intervention.

Case Study: Multi-Agent System in Enterprise Market Analysis

Scenario: A global consumer electronics firm launches a multi-agent system to support its marketing and strategic planning division. The goal is to automate the weekly generation of a market intelligence briefing that includes competitor analysis, consumer sentiment, and emerging technology trends.

System Composition:

- Web Crawler Agent: Searches relevant industry news sites, tech blogs, and regulatory filings for updates on competitors, product launches, and pricing changes.

- Sentiment Analysis Agent: Uses vibe-aware NLP to assess consumer sentiment from social media posts and product reviews across global markets.

- Trend Detection Agent: Monitors patent databases, venture capital funding flows, and R&D publications to identify emerging technologies.

- Report Generator Agent: Compiles the collected insights into a formatted executive summary with visualizations and recommendations tailored to different stakeholders (marketing, R&D, executive leadership).

Interaction & Coordination:

- Each agent operates asynchronously but shares progress through a central task manager that monitors workflow status and handles coordination.

- If the Web Crawler detects a new product release, it flags the Sentiment Analysis Agent to prioritize social media data related to that product.

- The Report Generator adapts content formatting depending on which department will review the final briefing.
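
This coordination pattern can be sketched with a central task manager that routes messages between agents. The sketch is simplified and sequential: the agents, message formats, and the product name `Competitor X Phone 2` are hypothetical. A production system would run agents asynchronously, for example with `asyncio` or a message broker. The crawler marks its product-launch message as urgent, so the sentiment agent handles it before other queued work.

```python
from collections import deque


class TaskManager:
    """Hypothetical central coordinator: agents post messages, the manager routes them."""

    def __init__(self) -> None:
        self.queue = deque()  # pending (recipient, message) pairs
        self.agents = {}      # agent name -> handler(manager, message)
        self.log = []         # record of every delivered message

    def register(self, name: str, handler) -> None:
        self.agents[name] = handler

    def post(self, recipient: str, message: str, urgent: bool = False) -> None:
        # Urgent work (e.g., a newly detected product launch) jumps the queue.
        if urgent:
            self.queue.appendleft((recipient, message))
        else:
            self.queue.append((recipient, message))

    def run(self) -> None:
        # Deliver messages until no agent has outstanding work.
        while self.queue:
            recipient, message = self.queue.popleft()
            self.log.append(f"{recipient} <- {message}")
            self.agents[recipient](self, message)


LAUNCH = "Competitor X Phone 2"  # hypothetical product found by the crawler
reports: list[str] = []


def web_crawler(manager: TaskManager, message: str) -> None:
    # Stubbed scan result: forward the news, then flag sentiment analysis as urgent.
    manager.post("report", f"news: {LAUNCH} announced")
    manager.post("sentiment", f"prioritize: {LAUNCH}", urgent=True)


def sentiment_agent(manager: TaskManager, message: str) -> None:
    topic = message.split(": ", 1)[1]
    manager.post("report", f"sentiment: {topic} reviews mostly positive")  # stubbed result


def report_agent(manager: TaskManager, message: str) -> None:
    reports.append(message)


manager = TaskManager()
manager.register("crawler", web_crawler)
manager.register("sentiment", sentiment_agent)
manager.register("report", report_agent)
manager.post("crawler", "weekly scan")
manager.run()
print("\n".join(manager.log))
```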

Outcomes:

- The time to generate competitive briefings was reduced from 3 days to under 1 hour.

- Decision-makers reported greater confidence in strategy planning due to faster access to timely, cross-validated insights.

- The system scaled across product categories and regions with minimal retraining.

This case illustrates how multi-agent collaboration enables modular, scalable, and specialized task execution—each agent performs efficiently in its domain while contributing to a coherent, high-value business output.

Challenges and Considerations

Despite their promise, AI agents introduce new technical and ethical challenges. Ensuring reliability, security, and alignment with user intent is critical, particularly as agents gain access to sensitive systems or execute financial transactions. There’s also a growing need for agent governance frameworks to track decision-making, restrict risky behavior, and enable auditing of autonomous workflows.

Furthermore, agentic systems require robust error recovery mechanisms, real-time monitoring, and transparent boundaries between automation and human control. These are active areas of research and development in both academia and industry.

Conclusion

AI agents and multi-agent systems represent a powerful shift in the application of artificial intelligence—from reactive tools to proactive collaborators. As businesses integrate these agents into daily operations, they stand to unlock significant gains in productivity, scalability, and decision quality. However, realizing this potential will require thoughtful design, interdisciplinary oversight, and a commitment to transparency and control in automated decision-making.

AI in Edge Computing

Edge computing involves processing data close to where it is generated rather than in a centralized cloud. Running AI models on edge devices lets them make decisions in real time without a round-trip to a remote server. This makes the approach well suited to IoT devices, autonomous vehicles, and smart infrastructure, where latency is a critical factor.

For instance, in Internet of Things (IoT) devices, having AI capabilities at the edge allows for faster and more efficient data analysis. Instead of sending all the raw data to the cloud for processing, the AI algorithms can be deployed directly on the edge devices. This enables them to analyze the data locally, identify patterns or anomalies, and take immediate actions if necessary.
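
As a minimal sketch of local analysis on an IoT device, the detector below keeps a short window of recent sensor readings and flags values whose z-score exceeds a threshold. Only those anomalies are returned for upload. The window size, threshold, and readings are assumptions for illustration. Real deployments often use compact learned models rather than a simple statistical rule.

```python
from collections import deque
from statistics import mean, stdev


class EdgeAnomalyDetector:
    """Runs on the device: keeps a short window of readings and flags outliers locally.
    The window size and z-score threshold are illustrative defaults."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0) -> None:
        self.readings = deque(maxlen=window)
        self.z_threshold = z_threshold

    def process(self, value: float) -> bool:
        """Return True if the value is anomalous relative to the recent window."""
        is_anomaly = False
        if len(self.readings) >= 2:  # stdev needs at least two points
            mu, sigma = mean(self.readings), stdev(self.readings)
            is_anomaly = sigma > 0 and abs(value - mu) / sigma > self.z_threshold
        if not is_anomaly:
            self.readings.append(value)  # keep anomalies out of the baseline
        return is_anomaly


def filter_for_upload(stream: list[float], detector: EdgeAnomalyDetector) -> list[tuple[int, float]]:
    """Only anomalous readings (with their position) go upstream; the rest stay on the device."""
    return [(i, v) for i, v in enumerate(stream) if detector.process(v)]


readings = [20.0, 20.5, 19.8, 20.2, 20.1, 19.9, 35.0, 20.3]  # e.g., temperature in °C
print(filter_for_upload(readings, EdgeAnomalyDetector()))  # [(6, 35.0)]
```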

Autonomous vehicles also benefit greatly from AI in edge computing. These vehicles generate enormous amounts of sensor data that must be processed and analyzed in real time to make split-second decisions. By running AI models on board, vehicles can process this data locally, reducing latency and enabling faster response times. This improves the safety and reliability of autonomous vehicles and reduces their dependence on a stable internet connection.

Similarly, smart infrastructure, such as smart cities or industrial automation systems, can leverage AI in edge computing to optimize operations. By deploying AI algorithms directly on the edge devices, these systems can analyze data in real-time and make intelligent decisions without relying on a centralized cloud. This enables faster response times, reduces bandwidth requirements, and enhances overall system efficiency.

Overall, integrating AI into edge computing enables real-time decision-making and intelligent automation. Processing data close to its source reduces latency and bandwidth use. It also supports applications that require immediate responses, from connected consumer devices to industrial control systems.

Federated Learning

Federated learning is a decentralized approach to training machine learning models. Data remains on local devices or servers, and only model updates (not raw data) are sent to a central server, which aggregates them into a shared model. This reduces the need to collect or store raw data in centralized repositories, offering significant privacy and compliance benefits, especially in domains like healthcare, finance, education, and telecommunications.

Since its introduction, federated learning has evolved from a theoretical privacy-enhancing framework into a deployable architecture, particularly in applications where data sensitivity and user trust are critical. Organizations use federated learning to build models across distributed edge devices, such as smartphones, medical sensors, or banking terminals, without compromising individual data privacy.

Key advantages of federated learning include enhanced data sovereignty, alignment with regulations like GDPR and HIPAA, and the ability to train on highly diverse, real-world data sources. It also supports personalized modeling: each device can fine-tune a global model based on local patterns, enabling more context-aware predictions while still contributing to collective intelligence.

However, adoption of federated learning in enterprise environments has progressed more cautiously than expected, largely due to persistent technical and operational challenges. Device heterogeneity (variations in hardware capabilities, network reliability, and data distributions across clients) can slow model convergence and reduce overall accuracy. Communication overhead also remains a limiting factor, particularly in low-bandwidth or intermittently connected settings.

Several techniques address these issues. Federated averaging (FedAvg) lets each client run several local training steps before sending its updated parameters. The server then averages those parameters, weighted by each client's amount of data, which reduces the number of communication rounds needed. Differential privacy adds calibrated statistical noise to model updates to limit what can be inferred about any individual data point, at the cost of some model accuracy. Secure multi-party computation (SMPC) further strengthens data security by letting participants jointly compute a function, such as the sum of their updates, without exposing their private inputs. Despite these advances, scaling federated learning to millions of devices or cross-institutional networks still demands robust coordination frameworks and adaptive communication protocols. It also requires careful attention to governance, fault tolerance, and regulatory compliance.
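
Federated averaging can be sketched in pure Python for a one-dimensional linear model. Each client runs a few epochs of gradient descent on its own data, and the server averages the returned parameters, weighted by client dataset size. The raw `(x, y)` pairs never leave `local_update`. The learning rate, epoch and round counts, and toy data are illustrative assumptions. Real systems add networking, client sampling, and secure aggregation.

```python
Params = tuple[float, float]  # (w, b) for the 1-D linear model y = w * x + b
Dataset = list[tuple[float, float]]  # one client's private (x, y) pairs


def local_update(params: Params, data: Dataset, lr: float = 0.1, epochs: int = 10) -> Params:
    """Client side: a few epochs of per-example gradient descent on squared error.
    Only the resulting parameters are returned; the data stays here."""
    w, b = params
    for _ in range(epochs):
        for x, y in data:
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return w, b


def federated_averaging(initial: Params, clients: list[Dataset], rounds: int = 50) -> Params:
    """Server side: broadcast the global model, collect client updates,
    and average them weighted by each client's number of examples."""
    global_params = initial
    for _ in range(rounds):
        updates = [(local_update(global_params, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        w = sum(p[0] * n for p, n in updates) / total
        b = sum(p[1] * n for p, n in updates) / total
        global_params = (w, b)
    return global_params


# Illustrative toy data: two clients see different x ranges (a simple non-IID split)
# of the same line y = 2x + 1.
clients = [
    [(x, 2 * x + 1) for x in (0.0, 0.25, 0.5, 0.75, 1.0)],
    [(x, 2 * x + 1) for x in (1.0, 1.5, 2.0)],
]
w, b = federated_averaging((0.0, 0.0), clients)
print(f"w={w:.3f}, b={b:.3f}")  # close to w=2.000, b=1.000
```

The clients' data cover different input ranges, yet the averaged model recovers the shared line because both datasets come from it. With noisy, genuinely conflicting client data, FedAvg converges more slowly and less exactly.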

More recently, federated learning is being combined with retrieval-augmented generation (RAG) and with foundation model fine-tuning, particularly in privacy-sensitive sectors. For example, medical institutions may collaboratively fine-tune large language models on local patient data without ever sharing that data with external parties. RAG itself combines information retrieval with large language models (LLMs) to produce more accurate and contextually grounded responses. Instead of relying solely on a model’s internal knowledge (which can be incomplete or outdated), a RAG system first retrieves relevant documents or data from an external knowledge base, such as a database, website, or document store. It then generates a response using both the retrieved content and the model’s reasoning abilities.
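
The retrieve-then-generate flow of RAG can be sketched as follows. To stay self-contained, this sketch ranks documents by simple word overlap instead of the embedding-based vector search most RAG systems use. It also stops at building the augmented prompt rather than calling an LLM. The document IDs and contents are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return IDs of the k documents sharing the most words with the query."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(text)), doc_id) for doc_id, text in documents.items()]
    scored = [(overlap, doc_id) for overlap, doc_id in scored if overlap > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # most overlap first, ties by ID
    return [doc_id for _, doc_id in scored[:k]]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Augment the question with retrieved passages; this string would go to the LLM."""
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in retrieve(query, documents, k))
    return (
        "Answer using only the sources below and cite them by ID.\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical internal knowledge base.
DOCS = {
    "hr-12": "Employees may carry over up to five unused vacation days into the next year.",
    "hr-04": "Vacation requests must be submitted two weeks in advance.",
    "it-09": "Laptops must be encrypted before connecting to the corporate network.",
}
print(build_prompt("How many vacation days can I carry over?", DOCS))
```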

Edge-only inference refers to the process of running AI model predictions directly on a local device—such as a smartphone, IoT sensor, or embedded controller—without needing to send data to a remote server or cloud. In this approach, the AI model is pre-trained elsewhere and then deployed to the edge device, where it performs inference (i.e., makes decisions or predictions) using locally collected data. Edge-only inference is common in applications like facial recognition on phones, voice assistants, wearable health monitors, and industrial sensors.

Table: Comparison of Federated Learning, Centralized Learning, and Edge-Only Inference

| Feature / Approach | Federated Learning | Centralized Learning | Edge-Only Inference |
|---|---|---|---|
| Data Location | Remains on local devices; only model updates shared | Transferred to and stored in a central repository | Remains local; no model training occurs |
| Privacy Protection | High; supports compliance with data protection regulations | Lower; higher risk of data exposure | Very high; no data transmission or learning |
| Model Training | Distributed across many edge devices | Conducted on centralized servers | Not applicable (inference only) |
| Computational Demand | Shared among distributed devices | Centralized on high-performance infrastructure | Low; only prediction computations on device |
| Personalization Capability | High; models can adapt locally | Moderate; personalization requires separate steps | Limited; uses pre-trained model without adaptation |
| Scalability | Moderate to high (requires orchestration) | High (with enough infrastructure) | High |
| Real-Time Capability | Inference runs locally; model updates depend on communication rounds | Lower (each prediction requires a server round-trip) | High; operates in real time |
| Vulnerability to Bias | Lower with diverse local data, but depends on coordination | Higher if central data is not representative | Dependent on pre-trained model quality |
| Use Cases | Healthcare, finance, telecom, predictive text on mobile devices | Image recognition, fraud detection, recommendation systems | Smart home devices, IoT sensors, AR/VR headsets |

Case Study: Federated Learning in Mobile Health Monitoring

Background: A consortium of hospitals and medical device manufacturers aimed to build a predictive model for early detection of cardiac events using data from wearable devices (e.g., heart rate monitors, ECG sensors). Due to the sensitive nature of patient health data and regulatory constraints (HIPAA in the U.S., GDPR in the EU), traditional centralized machine learning was not feasible.

Solution: The stakeholders adopted a federated learning approach. Patient data remained on wearable devices or local hospital servers. Each device trained a local model on recent sensor readings, and only encrypted model updates (not patient data) were sent to a secure central server. These updates were aggregated to refine a global model and periodically redistributed to participating devices.

Benefits:

- Privacy preserved: No raw health data ever left the patient’s device or hospital server.

- Model personalization: Devices fine-tuned the global model based on user-specific patterns (e.g., resting heart rate variations).

- Scalability: Thousands of devices contributed to training without overloading any single data center.

- Improved outcomes: The aggregated model detected early warning signs of cardiac anomalies with higher sensitivity than prior models trained on small centralized datasets.

Challenges Addressed:

- Used differential privacy to further protect model updates.

- Employed adaptive synchronization to handle intermittent connectivity of mobile devices.

- Balanced model accuracy against battery life on wearable devices.
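
Protecting model updates with differential privacy usually involves two steps. First, each update is clipped to a maximum L2 norm; then Gaussian noise scaled to that norm is added. The sketch below shows the client-side variant with illustrative parameter values. It omits the privacy accounting needed to state a formal (ε, δ) guarantee. Many deployments instead add the noise at the server, to the aggregated update.

```python
import math
import random


def clip_update(update: list[float], max_norm: float) -> list[float]:
    """Scale the update down, if needed, so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def privatize_update(update: list[float], max_norm: float = 1.0,
                     noise_multiplier: float = 1.0, rng=None) -> list[float]:
    """Clip the update, then add Gaussian noise with std = noise_multiplier * max_norm."""
    rng = rng if rng is not None else random.Random()
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [u + rng.gauss(0.0, sigma) for u in clipped]


update = [3.0, 4.0]  # hypothetical weight delta from one wearable (L2 norm 5.0)
print(clip_update(update, max_norm=1.0))               # rescaled to L2 norm 1.0
print(privatize_update(update, rng=random.Random(42)))  # clipped, then noised
```

Clipping bounds how much any single device can influence the aggregate, which is what lets the noise scale be tied to `max_norm`. Larger `noise_multiplier` values give stronger privacy at the cost of accuracy.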

Outcome:

The federated approach led to the successful deployment of a real-time alert system for at-risk patients while maintaining full compliance with data privacy laws. The model’s accuracy exceeded baseline standards from traditional machine learning pipelines trained on small, siloed datasets.

In sum, federated learning remains a promising approach for privacy-preserving, distributed machine learning. While technical and logistical barriers still exist, progress in privacy-preserving computation, edge optimization, and cross-silo collaboration is steadily expanding its feasibility and relevance across industries.

Generative AI for Coding and “Vibe Coding”

One of the most transformative applications of generative AI in recent years is its use in software development. Large language models (LLMs) and the coding assistants built on them can write functional code in multiple languages, explain code logic, fix bugs, and even generate entire applications from natural language descriptions. Examples include GitHub Copilot (originally powered by OpenAI's Codex model), Amazon CodeWhisperer (now part of Amazon Q Developer), and Anthropic's Claude. These tools are reshaping how software is designed, built, and maintained across industries.

Generative AI as a Coding Partner

AI-assisted coding tools work by predicting the most likely next tokens of code (keywords, names, and symbols), given a prompt and the surrounding code. This is much like predictive text in writing, but applied to structured programming languages such as Python, JavaScript, SQL, and Java. They support:

- Code autocompletion: Writing boilerplate code, suggesting variable names, or completing functions.

- Bug detection and remediation: Highlighting and correcting syntax errors or logic flaws.

- Code explanation and documentation: Translating code into plain-language explanations for maintainability or onboarding.

- Test generation: Creating unit tests and sample input-output scenarios.
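A deliberately tiny model can illustrate the idea of predicting what comes next in code. It records which token follows each token in a small code corpus and then suggests the most frequent continuation. Real assistants use transformer-based LLMs that are trained on vast corpora and condition on long contexts. The bigram counter, the whitespace tokenization, and the sample corpus below are simplifying assumptions for illustration only.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for every token, which tokens follow it in the corpus."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for line in corpus:
        tokens = line.split()  # naive whitespace tokenization
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows


def suggest_next(follows: Dict[str, Counter], token: str) -> Optional[str]:
    """Return the most frequent continuation seen after `token`, if any."""
    counts = follows.get(token)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "for i in range ( n ) :",
    "for j in range ( m ) :",
    "for x in items :",
]
model = train_bigrams(corpus)
print(suggest_next(model, "in"))     # range
print(suggest_next(model, "range"))  # (
```

Because "range" follows "in" twice and "items" only once, the model suggests "range". An LLM makes the same kind of "most likely continuation" choice, but it learns far richer patterns.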

These features can improve productivity for experienced developers and shorten development cycles. The gains depend on the task and on how carefully the generated code is reviewed.

The Rise of “Vibe Coding”

A notable trend enabled by generative AI is “vibe coding”—a colloquial term referring to the ability of non-programmers to build working software by describing what they want in plain language. Rather than writing code manually, users express the intended functionality or behavior of an app, script, or workflow—essentially conveying the “vibe” of what the software should do.

For example:

- A marketing analyst might prompt an AI tool: “Create a dashboard that visualizes weekly customer acquisition trends with a filter for marketing channel.” The AI returns functional code (e.g., Python with Plotly or a Streamlit app) that can be run or further refined.

- A small business owner might ask: “Build a form that collects customer feedback and sends it to my email and Google Sheets.” The system produces the necessary HTML, JavaScript, and backend script using tools like Node.js or Google Apps Script.
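The first example can be made concrete with the data-preparation core that such a generated dashboard might contain. The sketch below groups new customers by ISO week and optionally filters them by marketing channel. It is hypothetical: the record format, the function name, and the sample data are assumptions. The charting layer (for example Plotly or Streamlit) is omitted so that the sketch runs with only the standard library.

```python
from collections import Counter
from datetime import date
from typing import Dict, List, Optional, Tuple

Signup = Tuple[date, str]  # (signup date, marketing channel)


def weekly_acquisitions(signups: List[Signup],
                        channel: Optional[str] = None) -> Dict[str, int]:
    """Count new customers per ISO week, optionally for one channel only."""
    counts: Counter = Counter()
    for day, source in signups:
        if channel is not None and source != channel:
            continue  # apply the marketing-channel filter
        year, week, _ = day.isocalendar()
        counts[f"{year}-W{week:02d}"] += 1
    return dict(sorted(counts.items()))


signups = [
    (date(2025, 3, 3), "email"),
    (date(2025, 3, 4), "social"),
    (date(2025, 3, 11), "email"),
    (date(2025, 3, 12), "email"),
]
print(weekly_acquisitions(signups))                    # {'2025-W10': 2, '2025-W11': 2}
print(weekly_acquisitions(signups, channel="social"))  # {'2025-W10': 1}
```

Even a short function like this embeds choices, such as ISO weeks versus calendar weeks, that the person prompting should check against what they actually meant.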

This approach dramatically lowers the barrier to entry for digital creation and automation. Paired with no-code platforms or low-code environments, generative AI empowers non-technical users to prototype tools, automate tasks, or launch web-based services without hiring a developer or learning a programming language.

Implications for Business and Education

Generative coding tools are revolutionizing both professional development environments and business operations. Companies are integrating these models into their internal platforms, enabling staff to generate internal tools, process automations, and analytics dashboards with limited IT support.

In education, students in fields like business, data science, or engineering can focus more on logic and design while using AI to assist with syntax and code structure. This supports broader digital literacy and accelerates experimentation.

Challenges and Limitations

While generative AI reduces technical barriers, it introduces new concerns:

- Code quality and security: AI may generate insecure or inefficient code if not reviewed.

- Over-reliance: Users may adopt code they don’t fully understand, leading to maintenance or compliance risks.

- Intellectual property and licensing: There are ongoing debates over whether AI-generated code is truly original or derived from training data.

Therefore, human oversight, testing, and iterative improvement remain essential—particularly for production-grade or customer-facing applications.
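A classic example of insecure code is a SQL query built by pasting user input directly into the query string. This pattern allows SQL injection, and it is the kind of flaw human review should catch. The sketch below contrasts it with a parameterized query. It uses Python's built-in `sqlite3` module and a hypothetical `customers` table.

```python
import sqlite3
from typing import List, Tuple


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a small hypothetical table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, email TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [("Ana", "ana@example.com"), ("Ben", "ben@example.com")])
    return conn


def find_customer_unsafe(conn: sqlite3.Connection, name: str) -> List[Tuple[str]]:
    # Insecure: user input becomes part of the SQL text itself.
    query = f"SELECT email FROM customers WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_customer_safe(conn: sqlite3.Connection, name: str) -> List[Tuple[str]]:
    # Safer: the "?" placeholder makes the driver treat `name` as data only.
    return conn.execute("SELECT email FROM customers WHERE name = ?",
                        (name,)).fetchall()


conn = make_db()
attack = "x' OR '1'='1"
print(len(find_customer_unsafe(conn, attack)))  # 2: every row leaks
print(len(find_customer_safe(conn, attack)))    # 0: no customer has that name
```

Both functions return the same result for ordinary names, which is why a quick manual check can miss the problem. A test that feeds in hostile input exposes the difference.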

In summary, generative AI is democratizing access to software creation. From expert developers seeking productivity gains to non-coders engaging in “vibe coding,” the ability to describe intent and receive working software is reshaping how individuals and organizations solve problems, innovate, and build digital experiences.

AI in Robotics and Automation

AI is playing a crucial role in advancing robotics and automation. Intelligent robotic systems, equipped with machine learning algorithms, are becoming more adept at tasks ranging from manufacturing and logistics to healthcare and service industries. These AI-powered robots are capable of analyzing data, making decisions, and adapting to changing environments. They can perform complex tasks with precision and efficiency, reducing the need for human intervention.


In manufacturing, AI robots are revolutionizing the production line. They can work alongside humans, assisting in repetitive and physically demanding tasks. With their ability to learn from experience, these robots can optimize processes, improve productivity, and minimize errors.

In logistics, AI robots are transforming warehouses and distribution centers. They can autonomously navigate through complex environments, pick and pack items, and even collaborate with human workers. This not only speeds up operations but also reduces costs and improves customer satisfaction.
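Autonomous navigation depends on path planning. The sketch below models a warehouse floor as a grid of free (0) and blocked (1) cells and uses breadth-first search to find a shortest route between two cells. The layout and function name are hypothetical. Production robots typically combine planners such as A* with sensor-based localization and obstacle avoidance, all of which are omitted here.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def shortest_path(grid: List[List[int]], start: Cell,
                  goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search over free cells (0); returns a shortest route or None."""
    rows, cols = len(grid), len(grid[0])
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through predecessors to rebuild the route.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            free = 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0
            if free and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


warehouse = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],  # shelving, with a single gap at column 2
    [0, 0, 0, 0],
]
print(shortest_path(warehouse, (0, 0), (2, 0)))
```

Breadth-first search is guaranteed to find a shortest route when every move costs the same. A* adds a distance heuristic so that large maps are searched faster.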

In healthcare, AI robots are enhancing patient care and assisting medical professionals. They can monitor vital signs, provide medication reminders, and assist with some procedures. Surgical robots, for example, are typically operated by a surgeon. These robots can also collect and analyze patient data, enabling healthcare providers to make more informed decisions.

In the service industry, AI robots are being used in various applications, such as customer service and hospitality. They can interact with customers, answer queries, and provide personalized recommendations. These robots are also capable of learning from customer interactions, improving their performance over time.

Overall, AI in robotics and automation is revolutionizing industries by increasing efficiency, improving accuracy, and enhancing productivity. As technology continues to advance, we can expect to see even more sophisticated AI-powered robots that can handle complex tasks and contribute to a more automated future.

AI-driven Drug Discovery

AI is playing a significant role in drug discovery and development. Machine learning models can analyze vast datasets to identify potential drug candidates, predict their effectiveness, and optimize the drug discovery process, reducing time and costs.
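Ligand-based virtual screening is one long-standing computational technique for identifying candidates. Each compound is encoded as a binary fingerprint, a set of "on" bits that mark structural features. Compounds are then ranked by their Tanimoto similarity to a known active molecule. The sketch below uses made-up fingerprints and compound names. Real pipelines derive fingerprints with cheminformatics toolkits and increasingly rely on learned models rather than a fixed similarity score alone.

```python
from typing import Dict, FrozenSet, List, Tuple

Fingerprint = FrozenSet[int]  # indices of "on" feature bits


def tanimoto(a: Fingerprint, b: Fingerprint) -> float:
    """Tanimoto (Jaccard) similarity: shared bits / bits present in either."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def rank_candidates(reference: Fingerprint,
                    library: Dict[str, Fingerprint]) -> List[Tuple[str, float]]:
    """Rank library compounds by similarity to a known active compound."""
    scores = [(name, tanimoto(reference, fp)) for name, fp in library.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


known_active = frozenset({1, 4, 7, 9})
library = {
    "compound_A": frozenset({1, 4, 7, 12}),
    "compound_B": frozenset({2, 5, 8}),
    "compound_C": frozenset({1, 4, 7, 9, 15}),
}
for name, score in rank_candidates(known_active, library):
    print(f"{name}: {score:.2f}")
# compound_C: 0.80
# compound_A: 0.60
# compound_B: 0.00
```

The top-ranked compounds would then go on to more expensive testing. This is how screening narrows a large library and saves time and cost.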


AI is revolutionizing the field of personalized medicine by analyzing individual patient data, including genetic information, medical history, and lifestyle factors. Machine learning algorithms can identify patterns and make predictions to help healthcare professionals tailor treatments and interventions for each patient, improving outcomes and reducing adverse effects.

AI for Climate Change Solutions

AI is being leveraged to address environmental challenges, including climate change. Machine learning models are used for climate modeling, resource optimization, and analyzing environmental data to develop sustainable solutions and mitigate the impact of climate change. These AI-powered climate change solutions have the potential to revolutionize the way we approach environmental issues. By utilizing machine learning algorithms, AI can analyze vast amounts of data and identify patterns that humans may not be able to detect. This enables scientists and policymakers to make more informed decisions and develop effective strategies to combat climate change.

One area where AI is making a significant impact is climate modeling. Physics-based climate models simulate complex climate systems and project future scenarios from various inputs. Machine learning can speed up this work, for example by training emulators that approximate expensive simulations. This lets scientists explore more scenarios of how factors such as greenhouse gas emissions or deforestation will affect the climate in the long term. With better climate models, we can anticipate the consequences of our actions and take proactive measures to mitigate their impact.

Furthermore, AI is helping optimize resource allocation and management. By analyzing data on energy consumption, water usage, and waste production, AI algorithms can identify inefficiencies and suggest ways to reduce resource consumption. This can lead to more sustainable practices in industries such as agriculture, manufacturing, and transportation, ultimately reducing our carbon footprint.

Another valuable application of AI in addressing climate change is in analyzing environmental data. With the help of AI, scientists can process and interpret vast amounts of data collected from satellites, sensors, and other sources. This data can provide valuable insights into the state of our environment, including changes in temperature, sea levels, air quality, and biodiversity. By understanding these changes, we can develop targeted strategies to protect vulnerable ecosystems and species.
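A basic building block of this kind of analysis is estimating the trend in a measured series, such as temperature anomalies by year. The sketch below fits an ordinary least-squares line to such a series. The readings are synthetic placeholders, not real observations. Real climate analysis must also account for seasonality, missing data, and uncertainty.

```python
from typing import Sequence, Tuple


def linear_trend(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


years = [2000, 2005, 2010, 2015, 2020]
anomalies = [0.40, 0.48, 0.55, 0.61, 0.70]  # synthetic values for illustration
slope, intercept = linear_trend(years, anomalies)
print(f"trend: {slope * 10:.3f} degrees per decade")
```

ML models extend this idea to many variables and nonlinear patterns, but a simple fitted trend is often the baseline that those models are compared against.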

In conclusion, AI is playing a crucial role in developing innovative solutions to combat climate change. By leveraging machine learning models, AI can provide accurate climate predictions, optimize resource management, and analyze environmental data. With continued advancements in AI technology, we have the potential to make significant progress in mitigating the impact of climate change and creating a more sustainable future.

AI in Cybersecurity

AI is increasingly being employed in cybersecurity for threat detection, anomaly detection, and real-time response. Machine learning algorithms can analyze large datasets to identify patterns indicative of cyber threats and enhance overall cybersecurity measures.
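A simple statistical baseline for anomaly detection flags new observations that sit far from the historical mean, measured in standard deviations (a z-score). The sketch below applies this to hourly failed-login counts. The threshold of 3.0 and the counts are illustrative assumptions. Production systems typically use richer ML models and many more features.

```python
import statistics
from typing import List, Sequence


def flag_anomalies(history: Sequence[float], new_values: Sequence[float],
                   threshold: float = 3.0) -> List[int]:
    """Return indices of new values whose z-score against history exceeds threshold."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs >= 2 points
    if stdev == 0:
        # A perfectly constant history: any deviation at all is unusual.
        return [i for i, v in enumerate(new_values) if v != mean]
    return [i for i, v in enumerate(new_values)
            if abs(v - mean) / stdev > threshold]


# Hypothetical failed-login counts per hour.
baseline = [4, 6, 5, 7, 5, 6, 4, 5]
incoming = [6, 5, 48, 7]
print(flag_anomalies(baseline, incoming))  # [2]
```

The baseline is computed from past data only, so a sudden spike cannot inflate the statistics it is judged against.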


AI-powered Personalization: AI is revolutionizing personalized user experiences in various domains, including content recommendations, e-commerce, and healthcare. Advanced algorithms analyze user behavior to tailor products, services, and content to individual preferences, enhancing user satisfaction.
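One basic way to tailor content is to represent a user's interests and each item as vectors over the same features. The system then recommends the items with the highest cosine similarity to the user. The feature names, scores, and catalog below are hypothetical. Deployed recommenders usually learn these representations from behavior data, for example through collaborative filtering, rather than hand-coding them.

```python
import math
from typing import Dict, List


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse feature vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user: Dict[str, float], items: Dict[str, Dict[str, float]],
              k: int = 2) -> List[str]:
    """Return the k item names most similar to the user's profile."""
    ranked = sorted(items, key=lambda name: cosine(user, items[name]), reverse=True)
    return ranked[:k]


user_profile = {"running": 0.9, "outdoor": 0.7, "electronics": 0.1}
catalog = {
    "trail shoes": {"running": 1.0, "outdoor": 0.8},
    "smartwatch": {"electronics": 1.0, "running": 0.4},
    "desk lamp": {"home": 1.0},
}
print(recommend(user_profile, catalog))  # ['trail shoes', 'smartwatch']
```

Updating `user_profile` from new clicks or purchases would shift the ranking over time. This is the feedback loop behind "tailoring to individual preferences."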

These emerging trends and technologies reflect the dynamic nature of the AI/ML field and its continuous evolution. As these developments unfold, they are likely to shape the future landscape of technology, industry, and society.

Quantum Machine Learning

Though still highly experimental, the intersection of quantum computing and machine learning holds promise for certain complex problems. For some tasks, such as factoring large integers, the best known quantum algorithms are exponentially faster than the best known classical ones. Quantum machine learning algorithms may likewise offer advantages in areas like optimization, cryptography, and large-scale data analysis. Quantum machine learning is an emerging field that combines the principles of quantum computing with the techniques of machine learning. By harnessing quantum effects such as superposition and entanglement, these algorithms have the potential to transform various industries.


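One of the key hoped-for advantages of quantum machine learning is solving optimization problems more efficiently. Traditional optimization algorithms often struggle with large-scale problems. Quantum algorithms do not simply try every solution at once. Instead, they use superposition and interference to raise the probability of measuring good solutions. For unstructured search, Grover's algorithm offers a proven quadratic speedup. Approaches such as quantum annealing and the quantum approximate optimization algorithm (QAOA) are being studied for optimization, although a clear practical advantage over classical methods has not yet been demonstrated. If realized, this capability could benefit fields such as logistics, finance, and transportation, where finding the best solution among countless possibilities is crucial.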
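Grover's algorithm makes the speedup in search concrete. It finds one marked item among N possibilities in roughly (π/4)√N oracle queries, whereas classical guessing needs about N/2 on average. That is a quadratic speedup, not an exponential one. The sketch below simulates the quantum state vector classically for a tiny search space, using real amplitudes only. It therefore illustrates the mechanism (oracle sign flip followed by inversion about the mean) rather than delivering any speedup.

```python
import math
from typing import List


def grover_probabilities(n_items: int, marked: int) -> List[float]:
    """Classically simulate Grover's search; return measurement probabilities."""
    amps = [1 / math.sqrt(n_items)] * n_items  # uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        amps[marked] = -amps[marked]           # oracle: flip the marked sign
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]    # diffusion: invert about the mean
    return [a * a for a in amps]


probs = grover_probabilities(n_items=4, marked=2)
print([round(p, 3) for p in probs])  # [0.0, 0.0, 1.0, 0.0]
```

With four items, a single iteration makes the marked item certain to be measured. For larger N the success probability is high but not exactly 1, and the simulation's cost grows with N. Both points are reasons that small classical simulations cannot show quantum advantage.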

Another area where quantum computing matters is cryptography. A sufficiently large quantum computer running Shor's algorithm could break widely used public-key methods such as RSA and elliptic-curve cryptography. This threat has driven the development of post-quantum cryptography: classical algorithms designed to resist quantum attacks, for which NIST published its first standards in 2024. Quantum key distribution takes a different approach, using quantum physics itself to detect eavesdropping. The specific contribution of quantum machine learning to designing or analyzing cryptographic schemes remains largely exploratory.

Large-scale data analysis is another domain where quantum machine learning could make a significant impact. With the exponential growth of data, traditional machine learning algorithms often struggle to process and analyze vast amounts of information efficiently. Some quantum algorithms promise speedups for the linear-algebra operations at the heart of many ML methods. However, loading large classical datasets into quantum states is itself a major bottleneck, and several proposed speedups have later been matched by classical “dequantized” algorithms. If these obstacles are overcome, quantum approaches could be useful in fields like healthcare, finance, and scientific research, where making sense of complex data is crucial for decision-making and discovery.

However, despite its immense potential, quantum machine learning is still in its early stages. The development of practical quantum computers and the optimization of quantum algorithms are ongoing challenges. Additionally, the integration of quantum machine learning techniques into existing machine learning frameworks and infrastructure requires further research and development.

While researchers and industry experts are actively exploring the possibilities of quantum machine learning and are optimistic about its future, as of 2025, classical ML has continued to outpace quantum ML for most practical applications.

Barriers to Future AI Development

While the future of AI/ML holds great promise, several challenges and barriers must be addressed to fully realize these trends. Some are ethical and performance issues in current AI systems that must be overcome to move forward. Others may result from current or future legal or regulatory requirements. Here are some examples of the key barriers today:

- Data Privacy and Security Concerns: The increasing reliance on large datasets raises concerns about data privacy and security. Ensuring that sensitive information is handled responsibly and protected from unauthorized access is crucial.

- Bias and Fairness in AI Systems: AI models can inadvertently perpetuate biases present in training data, leading to unfair outcomes. Eliminating biases and ensuring fairness in AI systems is a complex challenge that requires ongoing efforts in data curation, model development, and evaluation.

- Lack of Standardization: The absence of standardized practices and frameworks can hinder interoperability and collaboration. Establishing common standards for model development, data sharing, and ethical considerations is essential for fostering a cohesive AI ecosystem.

- Explainability and Interpretability: Complex AI models, particularly deep learning models, are often considered “black boxes” with limited interpretability. Achieving explainability in AI systems is crucial for gaining user trust and addressing regulatory requirements.

- Resource Intensiveness: Training advanced AI models, especially large-scale ones, requires substantial computing resources and energy. The environmental impact and resource intensiveness of AI development need to be addressed to ensure sustainability.

- Ethical and Governance Challenges: The ethical implications of AI, including issues related to accountability, transparency, and responsible deployment, pose challenges for policymakers, organizations, and developers. Developing comprehensive governance frameworks is essential to address these concerns.

- Algorithmic Accountability: Determining accountability for AI decisions and actions, especially in cases of unintended consequences or errors, remains a complex challenge. Clarifying and establishing responsibility frameworks is crucial for addressing accountability concerns.

- Lack of Diversity in AI Development: The lack of diversity in the AI development workforce can result in biased algorithms and technologies that do not consider the needs and perspectives of diverse user groups. Promoting diversity in the field is crucial for building inclusive and unbiased AI systems.

- Interdisciplinary Collaboration: AI development often requires collaboration between experts from diverse fields such as computer science, ethics, law, and domain-specific industries. Encouraging interdisciplinary collaboration is essential to tackle complex challenges associated with AI.

- Regulatory Uncertainty: Rapid advancements in AI have outpaced the development of comprehensive regulatory frameworks. Uncertainty about regulatory requirements can hinder innovation and adoption. Establishing clear and adaptable regulations is crucial for fostering responsible AI development.

- Education and Skills Gap: There is a growing demand for skilled professionals in AI development, but there is also a shortage of individuals with the necessary expertise. Bridging the education and skills gap is crucial for building a workforce capable of advancing AI technologies responsibly.
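The bias item above calls for ongoing evaluation. A common check is to compare positive-outcome rates across groups. The sketch below computes the demographic parity difference, the gap between the highest and lowest group selection rates. The group labels and decisions are hypothetical. Demographic parity is only one of several fairness definitions, and those definitions can conflict with one another.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive decisions (e.g., loan approvals) for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(records: Iterable[Tuple[str, bool]]) -> float:
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


decisions = [("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
             ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False)]
print(selection_rates(decisions))                # {'group_a': 0.75, 'group_b': 0.25}
print(demographic_parity_difference(decisions))  # 0.5
```

A large gap does not prove unfairness on its own, because groups may differ in legitimate ways. It does signal where data curation and model review should look first.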

Addressing these barriers requires collaborative efforts from researchers, policymakers, industry leaders, and the broader community. By proactively addressing these challenges, the AI/ML community can work towards unlocking the full potential of these emerging trends while ensuring ethical, responsible, and inclusive practices.

Chapter Summary

This chapter delves into the emerging trends in the field of Artificial Intelligence (AI) and Machine Learning (ML), highlighting the dynamic nature of these disciplines and their continuous evolution. It discusses several key topics, including generative AI, diversity in AI development, algorithmic accountability, quantum machine learning, and federated learning.

Generative AI, exemplified by models like OpenAI’s ChatGPT, is identified as a powerful tool for content creation, creative industries, and the development of realistic virtual environments. The technology’s ability to generate human-like text, images, and even code can revolutionize various fields. However, the chapter also underscores the ethical considerations raised by generative AI, such as questions about intellectual property, authenticity, and the potential for misuse. It emphasizes the necessity to balance the positive use of generative AI with responsible and ethical practices.

The chapter also highlights the lack of diversity in the AI development workforce. It argues that this lack of diversity can lead to biased algorithms and technologies that fail to consider the needs and perspectives of diverse user groups. Promoting diversity in the field is therefore crucial for building inclusive and unbiased AI systems.

Algorithmic accountability is another critical issue discussed in the chapter. Determining accountability for AI decisions and actions, especially in cases of unintended consequences or errors, remains a complex challenge. The chapter underscores the importance of clarifying and establishing responsibility frameworks to address these accountability concerns.

The intersection of quantum computing and machine learning, known as quantum machine learning, is identified as a promising area that may, for certain problems, outperform classical computers. However, the field is still in its early stages, with ongoing challenges in the development of practical quantum computers and the optimization of quantum algorithms.

The chapter also presents federated learning as a promising approach for enabling privacy-preserving and collaborative machine learning. However, it notes that coordinating the training process across multiple devices can be complex and requires robust algorithms and protocols to handle potential issues.

In conclusion, the chapter emphasizes that these emerging trends and technologies are likely to shape the future landscape of technology, industry, and society. However, it also points to the barriers to future AI development, including biases, lack of diversity, accountability issues, and technical challenges. The chapter calls for ongoing efforts in research and development to overcome these barriers and harness the full potential of AI/ML technologies.

Discussion Questions

- How do reasoning models differ from traditional predictive models, and in what ways might they improve decision-making in business strategy?

- AI agents can now plan, execute, and revise tasks autonomously. What types of jobs or functions are most likely to be augmented or displaced by agentic systems in the next five years?

- “Vibe coding” enables non-programmers to create software using natural language—how might this shift the boundary between technical and non-technical roles in organizations?

- In what ways can retrieval-augmented generation (RAG) and tool-augmented reasoning improve the trustworthiness of generative AI systems in regulated industries such as healthcare or finance?

- Compare and contrast federated learning and centralized learning in terms of data privacy, performance, and scalability—when would each be most appropriate for a business?

- How do multi-agent systems reflect organizational dynamics, and what design principles should be considered when coordinating autonomous agents in complex workflows?

- As generative AI tools become more integrated into content creation, code development, and media production, what ethical safeguards should organizations put in place to ensure responsible use?

- Reasoning models are becoming more transparent and interpretable—how might this influence how regulators or stakeholders assess the fairness or accountability of AI decisions?

- With the rise of multimodal NLP and sentiment nuance detection, how might businesses better understand customer experience or employee well-being beyond traditional analytics?

- Looking at the broad ecosystem of emerging technologies—generative AI, reasoning models, autonomous agents, and federated systems—what new leadership or management skills will be essential in AI-enabled organizations?