2 Historical Context and Evolution of AI/ML: A Journey Through Time

Learning Objectives

- Understand the historical journey and evolution of AI and ML, including the roles of key figures like Alan Turing and John McCarthy.

- Describe the capabilities and characteristics that define Artificial General Intelligence (AGI), such as learning capability, reasoning and problem-solving, understanding natural language, transfer learning, and self-awareness and autonomy.

- Assess the ethical considerations for AI systems, including transparency, accountability, and bias.

- Understand the concept and significance of evaluation metrics in assessing the performance of AI models in language understanding and generation.

- Explain the challenges and advancements in AI systems for text-based interactions and their ability to produce human-like responses.

History of AI/ML

The roots of Artificial Intelligence (AI) and Machine Learning (ML) trace back to a rich tapestry of human ingenuity, mathematical theories, and technological milestones. Understanding the historical context and evolution of these transformative technologies provides invaluable insights into their current capabilities and future potential.

The Birth of Artificial Intelligence

The seeds of AI were sown in the mid-20th century, as pioneers like Alan Turing and John McCarthy laid the foundation for a field that sought to replicate human intelligence through machines. The groundbreaking Dartmouth Conference in 1956 marked the official birth of AI, setting the stage for decades of exploration and innovation.

Early Challenges and Symbolic AI

The early years of AI were marked by optimism and ambitious goals, yet progress was slow. Symbolic AI, which focused on rule-based systems and explicit programming, dominated this period. Researchers grappled with challenges such as natural language processing and the symbolic representation of knowledge, laying the groundwork for subsequent developments.

The Rise of Machine Learning

As the limitations of symbolic AI became apparent, a paradigm shift occurred. Although the term “machine learning” was coined by Arthur Samuel in 1959, the approach gained real momentum in the 1980s and 1990s. Rather than hand-coding rules, researchers embraced a data-driven approach in which machines learn patterns from examples and make predictions without being explicitly programmed for each case. This shift heralded a new era, with algorithms that adapt and improve based on experience.

The Renaissance of Neural Networks

Despite initial enthusiasm, the field went through periods of stagnation and reduced funding, known as “AI winters,” in the 1970s and again in the late 1980s and early 1990s. The major resurgence came in the 2010s, fueled by the renaissance of Neural Networks and the advent of deep learning. Growth in computational power and the availability of vast datasets propelled neural networks to unprecedented heights, enabling the development of powerful models capable of tasks ranging from image recognition to natural language understanding.

AI in the 21st Century

The 21st century has witnessed an explosion of AI applications across many domains. From virtual assistants on smartphones to recommendation systems powering online platforms, AI has become an integral part of daily life. Machine Learning algorithms, particularly deep neural networks, have demonstrated remarkable achievements, exceeding human performance in specific tasks such as playing the board game Go.

Large Language Models (LLMs) and Conversational AI

In recent years, systems built on Large Language Models (LLMs), such as ChatGPT, have garnered attention for their ability to generate human-like text. These models, most of which are based on the Transformer neural architecture, have revolutionized natural language understanding and generation, enabling more sophisticated and context-aware interactions between machines and humans.

The Uncharted Future

As we move through the third decade of the 21st century, the historical journey of AI and ML continues to unfold. Advances in quantum computing, pressing ethical considerations, and the pursuit of Artificial General Intelligence (AGI) present new challenges and opportunities. The historical context serves as a compass, guiding us through the evolution of AI/ML and inspiring the next chapter in this captivating narrative of human innovation and technological advancement.

AI Pioneers: Alan Turing and John McCarthy

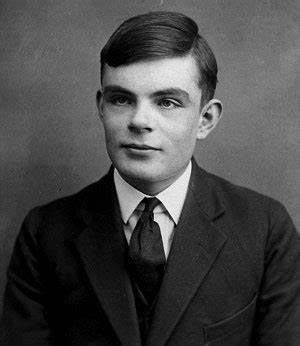

Alan Turing

Alan Mathison Turing (1912–1954) was a British mathematician, logician, and computer scientist. Born on June 23, 1912, in Maida Vale, London, Turing showed early signs of exceptional mathematical talent. During World War II, he played a crucial role in breaking the German Enigma code at Bletchley Park, contributing significantly to Allied efforts.

Turing is widely regarded as the father of theoretical computer science and artificial intelligence. In 1950, he proposed the concept of the Turing Test in his paper “Computing Machinery and Intelligence,” suggesting a criterion for determining a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human.

Turing’s influence on AI/ML is profound. His work laid the theoretical foundation for modern computer science, including the development of algorithms and the concept of a universal machine. The Turing Test became a benchmark for AI researchers, stimulating discussions about machine intelligence and consciousness. Turing’s visionary ideas continue to shape the philosophical and technical aspects of artificial intelligence.

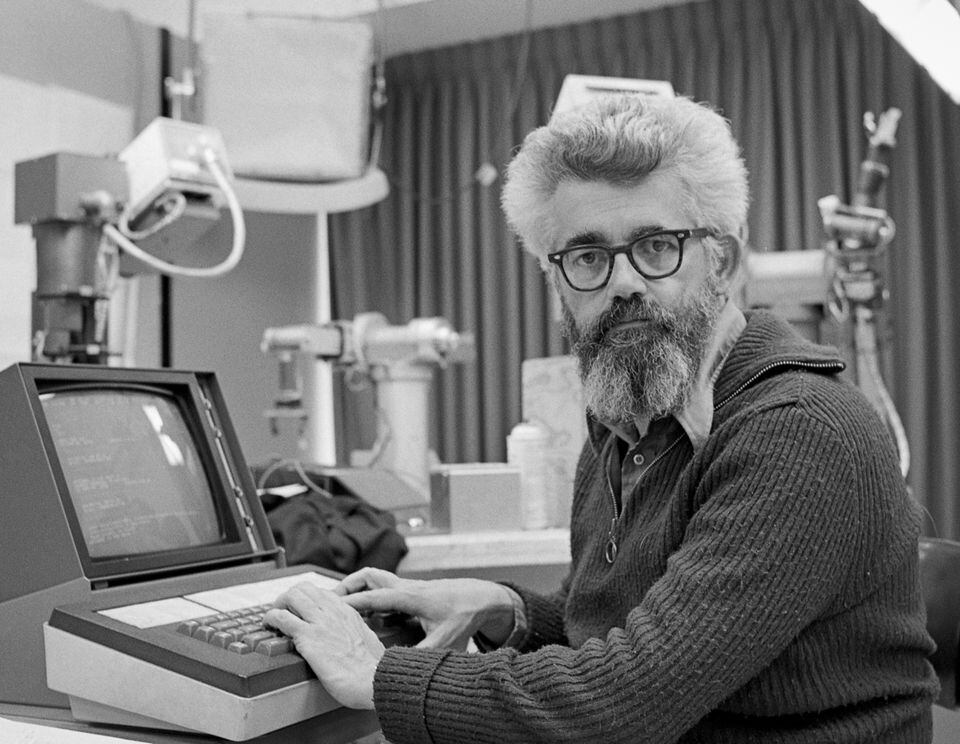

John McCarthy

John McCarthy (1927–2011) was an American computer scientist and cognitive scientist. Born on September 4, 1927, in Boston, Massachusetts, McCarthy earned his Ph.D. in mathematics from Princeton University. He held academic positions at various institutions, including Stanford University, where he founded the Stanford Artificial Intelligence Laboratory (SAIL).

McCarthy is best known for coining the term “artificial intelligence” (AI) in the 1955 proposal for the Dartmouth Conference, the summer 1956 workshop he organized with Marvin Minsky, Nathaniel Rochester, and Claude Shannon. He also developed the programming language LISP (List Processing) in 1958, which became instrumental in AI research. McCarthy received the Turing Award in 1971 for his contributions to the field.

John McCarthy played a pivotal role in shaping the field of AI. His introduction of the term “artificial intelligence” marked the beginning of AI as a distinct discipline. McCarthy’s development of LISP provided a powerful tool for AI researchers, facilitating the implementation of symbolic reasoning and problem-solving techniques. His leadership at the Dartmouth Conference laid the groundwork for AI as an interdisciplinary field and established AI as a legitimate area of study and research. McCarthy’s lasting impact is reflected in the ongoing advancements and applications of artificial intelligence.

Modern AI/ML Enabling Technologies

Modern AI is the result of various technological advances and breakthroughs across multiple domains. Several key advancements have played pivotal roles in making contemporary AI systems possible. Here are some of the critical technology developments that have contributed to the evolution of modern AI:

- Computational Power: The exponential increase in computational power, driven by advances in hardware, particularly GPUs (Graphics Processing Units), has been instrumental in training large and complex neural networks. This increase in processing capabilities has enabled the training of deep learning models at unprecedented scales.

- Data Availability and Big Data: The availability of vast amounts of labeled and unlabeled data, often referred to as big data, has been crucial for training machine learning models, especially deep neural networks. Large datasets enable more accurate and robust model training, allowing AI systems to learn complex patterns and relationships.

- Algorithms and Neural Networks: Advances in machine learning algorithms, especially the development of deep learning techniques and neural network architectures, have significantly improved the capabilities of AI systems. Convolutional Neural Networks (CNNs) for image processing, Recurrent Neural Networks (RNNs) for sequential data, and Transformer models have been key innovations.

- Backpropagation and Training Techniques: The development and widespread adoption of backpropagation as a training algorithm for neural networks have been pivotal. Additionally, advancements in optimization techniques, regularization methods, and the use of pre-training and transfer learning have enhanced the efficiency of training deep models.

- Natural Language Processing (NLP): Progress in NLP has been driven by innovations such as word embeddings, attention mechanisms, and transformer architectures. These advancements have significantly improved the ability of AI systems to understand and generate human-like language.

- Reinforcement Learning: Developments in reinforcement learning algorithms and methodologies have enabled AI systems to learn optimal decision-making strategies in dynamic environments. Deep reinforcement learning, combining deep neural networks with reinforcement learning, has been particularly influential.

- Generative Adversarial Networks (GANs): GANs, introduced by Ian Goodfellow and his colleagues in 2014, have revolutionized the field of generative modeling. GANs consist of two neural networks (a generator and a discriminator) engaged in an adversarial training process, leading to the generation of realistic synthetic data.

- Transfer Learning: The concept of transfer learning, where a model trained on one task is adapted to perform another related task, has allowed AI systems to leverage knowledge gained in one domain for improved performance in another. This has been crucial for training models with limited labeled data.

- Cloud Computing and Distributed Computing: The availability of cloud computing services and distributed computing frameworks has facilitated the efficient training and deployment of AI models at scale. Organizations can leverage cloud resources to handle large datasets and compute-intensive tasks.

- Open-Source Frameworks: The widespread adoption of open-source machine learning frameworks like TensorFlow and PyTorch has democratized AI research and development. These frameworks provide accessible tools for building and deploying AI models.

- Interdisciplinary Collaboration: Advances in AI have been propelled by interdisciplinary collaboration between researchers, engineers, and domain experts. The integration of expertise from computer science, mathematics, neuroscience, and other fields has led to innovative approaches and solutions.

The synergy of these technological advances has transformed AI from a theoretical concept to a practical and impactful technology with applications in various domains, including computer vision, natural language processing, healthcare, finance, and more. Ongoing research and technological developments continue to shape the landscape of modern AI.
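To make the backpropagation item above concrete, here is a minimal sketch in plain Python, assuming a hypothetical toy network (two inputs, three tanh hidden units, one linear output) trained with squared-error loss on a made-up linear target. None of the function names come from a library. The backward pass applies the chain rule from the output back toward the inputs, and `check_gradient` compares one backpropagated gradient with a finite-difference estimate, a common way to validate such code.

```python
import math
import random

# Hypothetical toy network: 2 inputs -> 3 tanh hidden units -> 1 linear output.
# Parameters are stored as [W1, b1, W2, b2] using plain Python lists.

def forward(params, x):
    """Forward pass: returns hidden activations h and the scalar output y."""
    W1, b1, W2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j]) for j in range(len(b1))]
    y = sum(w * hj for w, hj in zip(W2, h)) + b2
    return h, y

def loss_and_grads(params, x, target):
    """Squared-error loss 0.5 * (y - target)^2 and its gradients via backpropagation."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    loss = 0.5 * (y - target) ** 2
    # Backward pass: apply the chain rule from the output toward the inputs.
    dy = y - target                                              # dL/dy
    dW2 = [dy * hj for hj in h]                                  # dL/dW2[j]
    db2 = dy                                                     # dL/db2
    dz = [dy * W2[j] * (1 - h[j] ** 2) for j in range(len(h))]   # tanh'(z) = 1 - tanh(z)^2
    dW1 = [[dz[j] * xi for xi in x] for j in range(len(h))]      # dL/dW1[j][i]
    db1 = list(dz)                                               # dL/db1[j]
    return loss, (dW1, db1, dW2, db2)

def check_gradient(params, x, target, j=0, i=0, eps=1e-6):
    """Compare backprop's dL/dW1[j][i] with a central finite-difference estimate."""
    _, (dW1, _, _, _) = loss_and_grads(params, x, target)
    W1 = params[0]
    original = W1[j][i]
    W1[j][i] = original + eps
    loss_plus, _ = loss_and_grads(params, x, target)
    W1[j][i] = original - eps
    loss_minus, _ = loss_and_grads(params, x, target)
    W1[j][i] = original  # restore the weight
    return abs(dW1[j][i] - (loss_plus - loss_minus) / (2 * eps))

def init_params(n_in=2, hidden=3, seed=0):
    """Small random weights and zero biases, seeded for reproducibility."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    return [W1, [0.0] * hidden, W2, 0.0]

def train(data, params, lr=0.05, epochs=200):
    """Stochastic gradient descent; returns the mean loss recorded for each epoch."""
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, target in data:
            loss, (dW1, db1, dW2, db2) = loss_and_grads(params, x, target)
            total += loss
            W1, b1, W2, _ = params
            for j in range(len(b1)):
                for i in range(len(x)):
                    W1[j][i] -= lr * dW1[j][i]
                b1[j] -= lr * db1[j]
                W2[j] -= lr * dW2[j]
            params[3] -= lr * db2  # b2 is a float, so update it inside the list
        history.append(total / len(data))
    return history

# Toy regression data (an illustrative assumption): target = 0.5*x0 - 0.3*x1.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
data = [([a, b], 0.5 * a - 0.3 * b) for a in grid for b in grid]

params = init_params()
print("gradient check error:", check_gradient(params, data[3][0], data[3][1]))
history = train(data, params)
print(f"mean loss: epoch 1 = {history[0]:.4f}, epoch {len(history)} = {history[-1]:.4f}")
```

In practice, frameworks such as TensorFlow and PyTorch compute these gradients automatically through automatic differentiation, so hand-written backward passes like this one are mainly useful for building intuition.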

Artificial General Intelligence

Today’s AI systems are highly specialized, which naturally invites speculation about when such systems will be able to match human intelligence. This “generalized” AI, known as Artificial General Intelligence (AGI), would be a type of artificial intelligence that possesses the ability to understand, learn, and apply knowledge across a wide range of tasks, domains, and contexts, mirroring the broad cognitive abilities of human intelligence. Unlike today’s narrow, or specialized, AI systems that excel in specific tasks, AGI aims to exhibit a level of general intelligence comparable to that of humans.

Key characteristics of Generalized AI (AGI) would include:

- Versatility: AGI would be able to perform any intellectual task that a human being can. It should have the flexibility to apply its intelligence to diverse domains without requiring task-specific programming.

- Learning Capability: AGI should be capable of learning from experience and adapting to new situations. This involves not only acquiring knowledge in specific domains but also transferring knowledge and skills across different areas.

- Reasoning and Problem-Solving: AGI should demonstrate advanced reasoning abilities, including the ability to understand complex problems, formulate strategies, and solve problems in various contexts.

- Understanding Natural Language: Generalized AI should understand and generate natural language, allowing for effective communication with humans in a manner similar to how humans communicate with each other.

- Common Sense Reasoning: AGI should possess a form of common sense reasoning, allowing it to make inferences, understand context, and apply background knowledge to tasks.

- Self-Awareness and Autonomy: While not universally agreed upon, some definitions of AGI include aspects of self-awareness and autonomy, allowing the system to reflect on its own state and make decisions independently.

- Transfer Learning: AGI should be able to transfer knowledge and skills learned in one domain to another, exhibiting a form of generalization that goes beyond task-specific learning.

It’s important to note that achieving true AGI remains a challenging goal and is an active area of research in the field of artificial intelligence. As of this writing, no system has reached the level of generalized intelligence equivalent to that of humans. Most existing AI systems are considered narrow or specialized, excelling in specific tasks but lacking the broad cognitive abilities associated with AGI. The development of AGI raises ethical, societal, and technical considerations that researchers and regulators continue to explore.

The Turing Test

The Turing Test, proposed by the British mathematician and computer scientist Alan Turing in 1950, is a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. The test is designed to assess a machine’s capability to engage in natural language conversation and mimic human-like responses.

Here’s an overview of the Turing Test:

- Imitation of Human Behavior: The central idea of the Turing Test is for a human judge to engage in a natural language conversation with both a machine and another human without knowing which is which.

- Concealed Identities: The identities of the participants (human and machine) are concealed from the judge during the conversation. The judge is only aware that one participant is a machine, and the other is a human.

- Text-Based Interaction: The interaction typically occurs through text-based communication to eliminate any influence from physical appearance or other sensory cues.

If the judge cannot reliably distinguish between the machine and human responses based on the conversation, the machine is said to have passed the Turing Test. However, passing the Turing Test doesn’t imply that the machine possesses consciousness, self-awareness, or understanding. It simply indicates a level of conversational ability that is indistinguishable from human behavior.
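Turing did not prescribe a statistical procedure for deciding what “reliably distinguish” means. One possible way to operationalize it, sketched here as an assumption rather than as part of the original test, is to run many judged conversations and ask whether the judge identifies the machine more often than random guessing would. If the judge were only guessing, the number of correct identifications would follow a binomial distribution with probability 0.5, and a one-sided binomial test gives the probability of doing at least as well by chance. The function names and the 0.05 significance threshold are illustrative choices.

```python
from math import comb

def p_value_at_least(correct: int, trials: int, p_chance: float = 0.5) -> float:
    """One-sided binomial p-value: P(X >= correct) if the judge were only guessing."""
    return sum(
        comb(trials, k) * p_chance**k * (1 - p_chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )

def judge_distinguishes(correct: int, trials: int, alpha: float = 0.05) -> bool:
    """True if the judge's hit rate is significantly better than a coin flip."""
    return p_value_at_least(correct, trials) < alpha

# Hypothetical results: a judge makes 20 identifications.
print(judge_distinguishes(16, 20))  # True:  P(X >= 16) is about 0.006
print(judge_distinguishes(12, 20))  # False: P(X >= 12) is about 0.25
```

Under this reading, a machine does well when judges' accuracy cannot be distinguished from chance. Failing to beat chance is weaker evidence than it sounds, though: with only a few trials, even a judge with a real but small edge may not reach significance.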

The Turing Test has been influential in the development of artificial intelligence, serving as a benchmark for assessing the progress of AI systems in natural language processing and communication. Critics argue that passing the Turing Test doesn’t necessarily demonstrate true intelligence or understanding; it only reflects the ability to generate human-like responses within a specific context. The test also raises philosophical questions about the nature of consciousness, as it focuses on external behavior rather than internal cognitive processes. Despite its limitations, the Turing Test remains a landmark concept in the field of artificial intelligence and continues to stimulate discussions about the nature of intelligence and the potential for machines to exhibit human-like behavior.

Today, the scientific consensus on whether modern AI systems pass the Turing Test is nuanced. As of this writing, the field of artificial intelligence has made significant progress in natural language processing, and some AI systems can produce quite sophisticated responses in text-based conversations. However, consistently passing the Turing Test in its full, open-ended form, rather than in short or constrained exchanges, remains a challenging task.

Here are key points to consider regarding the scientific consensus:

- Narrow Contexts: In narrow contexts or specific domains, AI systems, including chatbots and virtual assistants, can generate human-like responses effectively. These systems often excel in well-defined tasks and provide useful interactions.

- Limited Understanding: While AI models demonstrate impressive language generation capabilities, they often lack true understanding or consciousness. They rely on patterns learned from vast datasets and may produce plausible-sounding responses without genuine comprehension.

- Context Dependence: The Turing Test, in its original form, involves a broad and open-ended conversation. Modern AI systems may struggle in open-ended contexts or fail to understand nuances, context shifts, or abstract concepts beyond their training data.

- Evaluation Metrics: The scientific community has developed various metrics to assess the performance of AI models in language understanding and generation. Metrics such as BLEU (common in machine translation) and ROUGE (common in summarization) score generated text by its n-gram overlap with human-written reference text. They are useful for comparing systems, but they focus on surface similarity and do not capture the full richness of human-like conversation.

- Ethical Considerations: The scientific community is also considering ethical dimensions of AI, including transparency, accountability, and bias. Ensuring responsible AI behavior is part of ongoing discussions and research.

- Continuous Development: AI research and development are dynamic fields, and models are continually evolving. The capabilities of AI systems are likely to improve over time, but achieving a level of understanding and reasoning comparable to human intelligence remains an open challenge.
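The limitation noted under Evaluation Metrics is easy to see in code. The sketch below is a simplified version of both metrics built on stated assumptions: whitespace tokenization, a single reference, no smoothing, and BLEU computed up to bigrams rather than the usual four-grams. It is meant for illustration, not as a substitute for standard reference implementations. `bleu` takes the geometric mean of clipped n-gram precisions and multiplies it by a brevity penalty; `rouge_n_recall` reports the fraction of reference n-grams that the candidate recovers.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count every contiguous n-token sequence in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate, reference, max_n=2):
    """Simplified sentence-level BLEU against a single reference (no smoothing)."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram count by how often it appears in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: penalize candidates that are not longer than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)

def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N recall: fraction of reference n-grams also found in the candidate."""
    cand_counts = ngrams(candidate.split(), n)
    ref_counts = ngrams(reference.split(), n)
    overlap = sum(min(c, cand_counts[g]) for g, c in ref_counts.items())
    total = sum(ref_counts.values())
    return overlap / total if total else 0.0

ref = "the cat sat on the mat"
print(round(bleu("the cat is on the mat", ref), 3))            # 0.707
print(round(rouge_n_recall("the cat is on the mat", ref), 3))  # 0.833
print(bleu("a feline rested upon the rug", ref))               # 0.0
```

The last line shows the weakness described above: a paraphrase with essentially the same meaning scores zero because it shares almost no n-grams with the reference.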

It’s essential to note that the concept of the Turing Test itself has been subject to criticism and debate within the scientific community. Some argue that focusing solely on human-like conversation may not be the most meaningful measure of AI intelligence.

As the field progresses, researchers are exploring new evaluation methods that go beyond the limitations of the Turing Test to better assess the true capabilities and limitations of AI systems. Ongoing discussions within the scientific community and advancements in research contribute to shaping the understanding of AI’s current state and future potential.

Chapter Summary

The chapter traces the evolution of AI and ML, beginning with the pioneering work of Alan Turing and John McCarthy and the 1956 Dartmouth Conference. It follows the field through the era of symbolic, rule-based AI, the data-driven rise of machine learning, and the AI winters, to the deep learning resurgence of the 2010s and today's large language models such as ChatGPT.

The chapter then surveys the technologies that made modern AI possible: growth in computational power (particularly GPUs), big data, advances in algorithms and neural network architectures, backpropagation and improved training techniques, NLP innovations such as attention and transformers, reinforcement learning, GANs, transfer learning, cloud and distributed computing, open-source frameworks, and interdisciplinary collaboration.

Next, it describes Artificial General Intelligence (AGI) and the characteristics such a system would need: versatility, learning capability, reasoning and problem-solving, natural language understanding, common sense reasoning, transfer learning, and, in some definitions, self-awareness and autonomy. No existing system has reached this level of general intelligence.

Finally, the chapter examines the Turing Test, how it works, and its limitations. Modern AI systems can produce sophisticated, human-like responses in narrow contexts, but they still struggle with open-ended conversation and genuine understanding, and metrics such as BLEU and ROUGE capture only part of conversational quality.

In summary, the chapter provides historical and conceptual grounding for the rest of the book, connecting the milestones of AI/ML history to the enabling technologies, the goal of AGI, and the ongoing debates about how to evaluate intelligence and build AI responsibly, with attention to transparency, accountability, and bias.

Chapter Discussion Questions

- How has the historical journey of AI and ML influenced the current state of these technologies?

- What are the key characteristics that define Artificial General Intelligence (AGI), and why are they important?

- Discuss the ethical considerations in AI. How do transparency, accountability, and bias play a role in AI development and implementation?

- What are the evaluation metrics used to assess the performance of AI models in language understanding and generation? Discuss their significance and limitations.

- How do AI systems handle text-based interactions? Discuss the sophistication of their responses and the challenges they face.

- How do large language models like ChatGPT revolutionize natural language understanding and generation?

- What role did symbolic AI play in the early years of AI development, and what challenges did it face?

- Discuss the concept of transfer learning in AGI and its importance.

- What are the key points to consider regarding the scientific consensus on AI systems?

- Discuss the concept of self-awareness and autonomy in AGI. Why is it a controversial aspect?